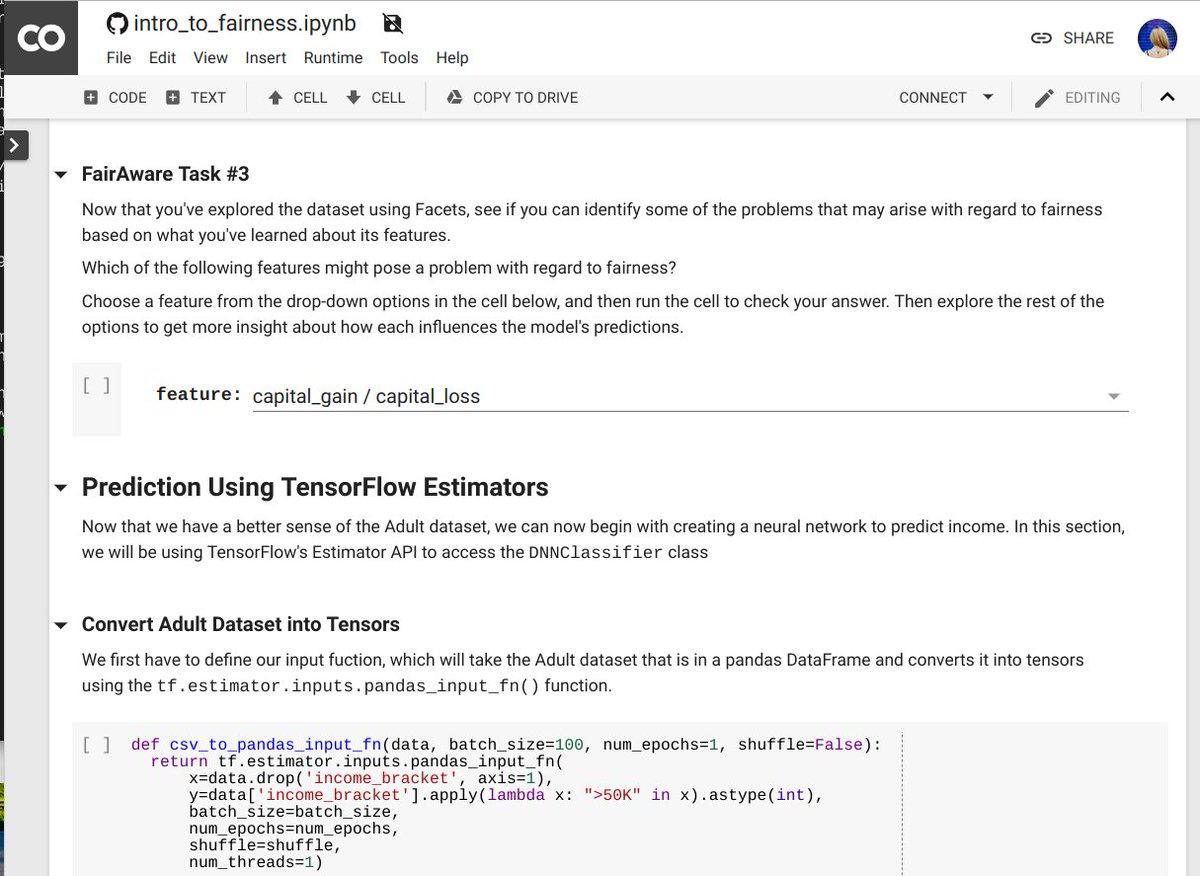

Built a handwritten digit recognition algorithm using neural networks. Trained it. Tested it. Understanding the concepts - a thread.

colab.research.google.com/drive/1-neDSoL…

#100DaysOfMLCode @myorwah @esemejeomole

output error = intended output - actual output.

So it means, Jay must meet that level for her to believe him.

Each full relay of our message (input) is called an epoch. We can run, say, 5 epochs to improve our output. The process of relaying the message is called training.
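Here is a minimal sketch of what repeating the message over several epochs looks like. It is a toy with a single weight, not the digit network itself; the function name `train`, the sample data and the learning rate of 0.1 are all assumptions made for illustration.

```python
def train(samples, epochs=5, learning_rate=0.1):
    """Toy training loop: learn one weight so that weight * x is close to y."""
    weight = 0.0
    history = []  # total squared error measured after each epoch
    for epoch in range(epochs):
        # One epoch = one full pass over every training sample.
        for x, y in samples:
            error = y - weight * x                # intended output - actual output
            weight += learning_rate * error * x   # nudge the weight to reduce the error
        total_error = sum((y - weight * x) ** 2 for x, y in samples)
        history.append(total_error)
    return weight, history


# Hypothetical data following y = 2 * x
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
weight, history = train(samples, epochs=5)

for epoch, total_error in enumerate(history, start=1):
    print(f"epoch {epoch}: total squared error {total_error:.3g}")
```

Each extra epoch shrinks the error a bit more on this toy data. On real data, more epochs help only up to a point.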

Input layer: the entrance of the neural network

Hidden layer: where communication (learning) happens

Output layer: where the results come out

Output error: intended output - actual output

Learning rate: moderating factor that controls how big each weight correction is, so the error shrinks steadily instead of overshooting

Epoch: one full pass over the training data. The number of epochs is how many times training is carried out.
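To see how these terms fit together, here is a rough sketch of a three-layer network in plain Python. It is not the notebook's code: the class name `TinyNetwork`, the sigmoid activation, the layer sizes, the toy data and the learning rate of 0.5 are all assumptions, and the weight updates use standard backpropagation for squared error.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyNetwork:
    """Hypothetical three-layer network: input -> hidden -> output."""

    def __init__(self, n_input, n_hidden, n_output, learning_rate, seed=0):
        rng = random.Random(seed)  # fixed seed so runs are repeatable
        self.lr = learning_rate
        # One row of weights per receiving node.
        self.w_ih = [[rng.uniform(-0.5, 0.5) for _ in range(n_input)]
                     for _ in range(n_hidden)]
        self.w_ho = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
                     for _ in range(n_output)]

    def forward(self, inputs):
        # Input layer -> hidden layer -> output layer
        hidden = [sigmoid(sum(w * x for w, x in zip(row, inputs)))
                  for row in self.w_ih]
        outputs = [sigmoid(sum(w * h for w, h in zip(row, hidden)))
                   for row in self.w_ho]
        return hidden, outputs

    def train(self, inputs, targets):
        hidden, outputs = self.forward(inputs)
        # Output error: intended output - actual output
        output_errors = [t - o for t, o in zip(targets, outputs)]
        # Scale each error by the sigmoid slope at that output.
        delta_out = [e * o * (1 - o) for e, o in zip(output_errors, outputs)]
        # Send the error back to the hidden layer through the old weights.
        delta_hidden = [
            h * (1 - h) * sum(self.w_ho[k][j] * delta_out[k]
                              for k in range(len(delta_out)))
            for j, h in enumerate(hidden)
        ]
        # The learning rate moderates the size of every weight correction.
        for k, d in enumerate(delta_out):
            for j, h in enumerate(hidden):
                self.w_ho[k][j] += self.lr * d * h
        for j, d in enumerate(delta_hidden):
            for i, x in enumerate(inputs):
                self.w_ih[j][i] += self.lr * d * x
        return sum(e * e for e in output_errors)


# Toy data (made up): two 4-pixel "images", two classes.
data = [
    ([0.99, 0.01, 0.99, 0.01], [0.99, 0.01]),
    ([0.01, 0.99, 0.01, 0.99], [0.01, 0.99]),
]

net = TinyNetwork(n_input=4, n_hidden=3, n_output=2, learning_rate=0.5)
epoch_errors = []
for epoch in range(200):  # 200 epochs = 200 full passes over the data
    epoch_errors.append(sum(net.train(x, t) for x, t in data))

print(f"first epoch error: {epoch_errors[0]:.4f}")
print(f"last epoch error:  {epoch_errors[-1]:.4f}")
```

For real handwritten digits the input layer would need one node per pixel and the output layer one node per digit, but the flow is the same.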

Code: colab.research.google.com/drive/1-neDSoL… cc @sirajraval @BecomingDataSci #SoDS

All credit goes to @rzeta0 for his book: tinyurl.com/yczslhpz