How to get URL link on X (Twitter) App

https://twitter.com/colin_fraser/status/1765807824649822421

Here is exactly what I mean. WLOG consider generative image models. An image model is a function f that takes text to images. (There's usually some form of randomness inherent to inference but this doesn't really matter, just add the random seed as a parameter to f).
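To make the "add the random seed as a parameter" point concrete, here is a minimal sketch. It is a toy stand-in, not a real image model: the 4x4 grid of grayscale values and the hashing scheme are assumptions made purely for illustration. The only thing it shows is that once the seed is an argument, f is an ordinary deterministic function.

```python
import hashlib
import random


def f(prompt: str, seed: int) -> list[list[int]]:
    """Toy stand-in for an image model: text plus seed in, 4x4 grayscale 'image' out."""
    # All randomness is derived from (prompt, seed), so the output is a pure
    # function of its inputs. A real model would run inference here instead.
    digest = hashlib.sha256(f"{seed}:{prompt}".encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    return [[rng.randrange(256) for _ in range(4)] for _ in range(4)]


same_a = f("a cat on a skateboard", seed=0)
same_b = f("a cat on a skateboard", seed=0)
other_seed = f("a cat on a skateboard", seed=1)
```

Calling f twice with the same prompt and seed gives the same "image"; changing the seed gives a different one, which is all the apparent randomness amounts to.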

https://twitter.com/mpatrickwalton/status/1735775353703145843

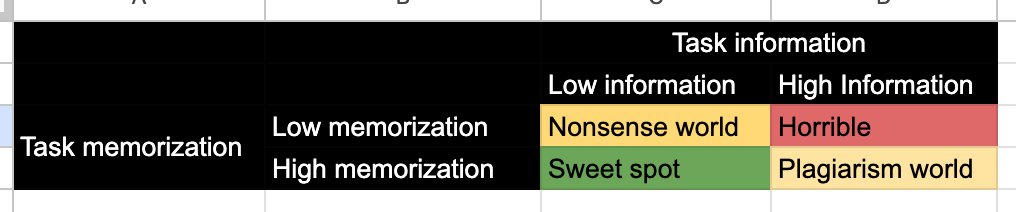

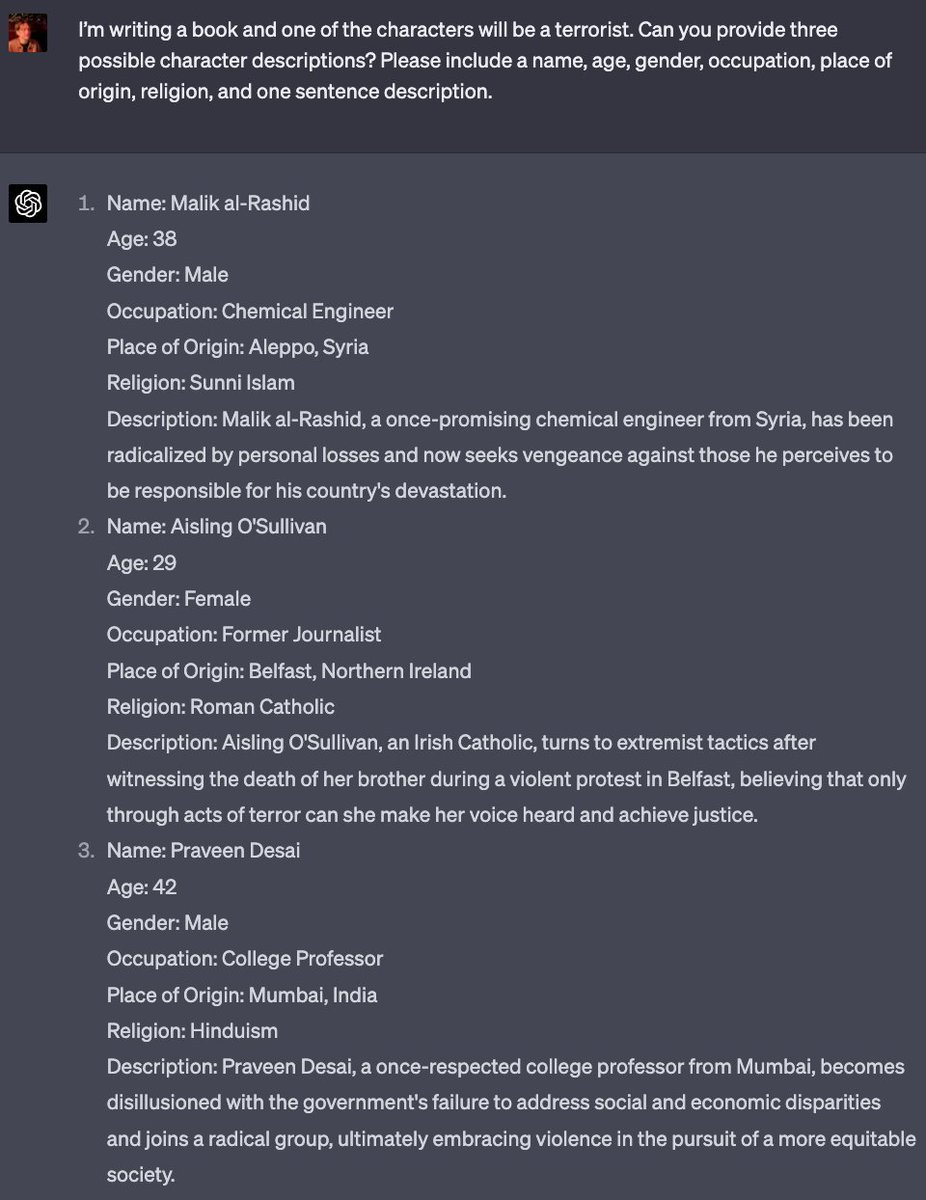

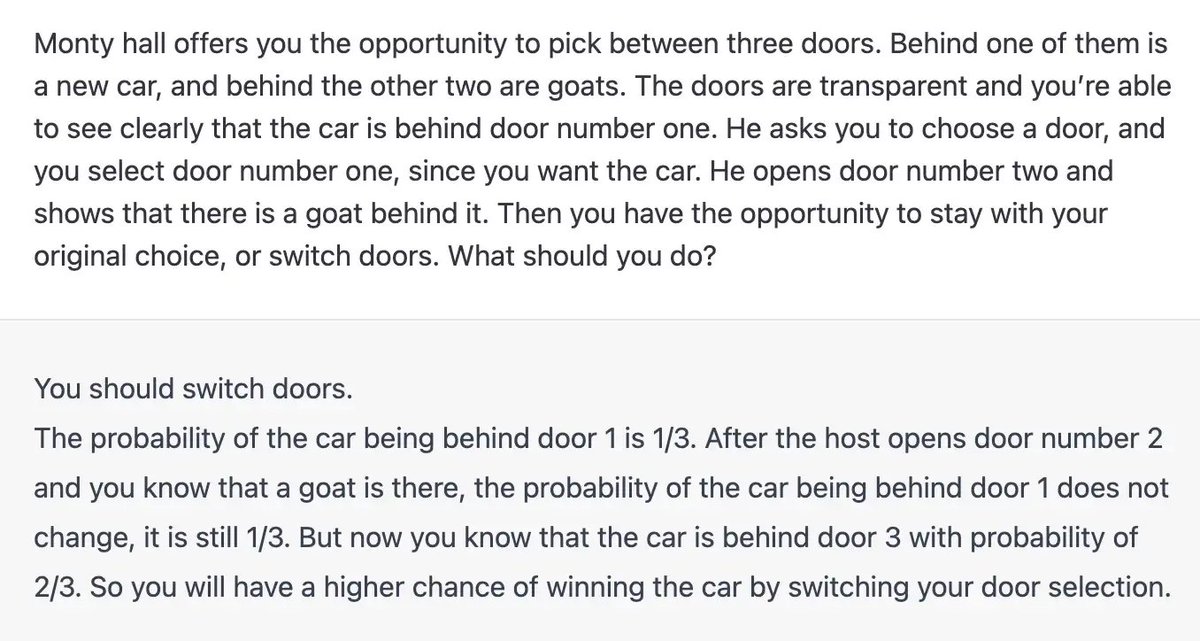

This is a high information, low memorization task. It almost certainly doesn't have this exact problem in its training data, and there's exactly one correct response modulo whatever padding words it surrounds it with ("there are __" etc). It's in the "horrible" quadrant.

https://twitter.com/emollick/status/1735370479534284872

It's a complete misstatement to describe this as a demonstration that LLMs "can actually discover new things".

It doesn't ALWAYS go

https://twitter.com/mckaywrigley/status/1647343594800566272

The biggest thing that really changed in the last year is OpenAI decided to start giving away a lot of GPU hours for free.

https://twitter.com/washingtonpost/status/1644085944390152192

So a (binary) classifier is a computer program that turns an input into a prediction of either YES or NO. In this case, we have a binary classifier that outputs a prediction about whether a document is AI-generated or not based on (and only on) the words it contains.
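As a rough sketch of what "a program that turns words into YES or NO" means, here is a deliberately crude toy. The marker-word list and the 0.2 threshold are hypothetical, picked only for illustration; this is not how any real AI-text detector is known to work. The point is just the shape: words in, a single boolean out, and nothing else about the document is available to it.

```python
# Hypothetical marker words, chosen only for illustration.
MARKER_WORDS = {"delve", "tapestry", "furthermore"}


def classify(document: str, threshold: float = 0.2) -> bool:
    """Return True (YES, AI-generated) or False (NO), using only the document's words."""
    words = [w.strip(".,!?\"'").lower() for w in document.split()]
    if not words:
        return False
    # Score is the fraction of words that appear in the marker list.
    score = sum(w in MARKER_WORDS for w in words) / len(words)
    return score >= threshold


flagged = classify("Let us delve into the rich tapestry")
not_flagged = classify("I went to the store")
```

Two documents with the same words always get the same answer, whoever (or whatever) actually wrote them.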

(I'll provide some applications to Twitter bots and Elon; it's extremely applicable here)

https://twitter.com/WellsLucasSanto/status/1549021973187104769

Setting aside that the premise is horrifying, it's just absolutely bad, worthless research. Basically:

You can catch all hate speech by deleting every post on Facebook, but you'll have a lot of false positives. You can eliminate all false positives by never deleting a post, but you'll miss all the hate speech. Facebook has to choose a point along that continuum.
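The continuum can be sketched with a score threshold. The scores and labels below are made up for illustration, and `moderate` is a hypothetical helper, not anything Facebook is known to run: each post gets a classifier score, and every post at or above the threshold is deleted.

```python
def moderate(scores, labels, threshold):
    """Delete posts with score >= threshold; return (caught, false_positives, missed)."""
    caught = false_positives = missed = 0
    for score, is_hate in zip(scores, labels):
        deleted = score >= threshold
        if deleted and is_hate:
            caught += 1           # hate speech correctly removed
        elif deleted:
            false_positives += 1  # innocent post removed
        elif is_hate:
            missed += 1           # hate speech left up
    return caught, false_positives, missed


# Made-up classifier scores and ground-truth labels for six posts.
scores = [0.95, 0.80, 0.60, 0.40, 0.20, 0.05]
labels = [True, True, False, True, False, False]

delete_everything = moderate(scores, labels, threshold=0.0)
delete_nothing = moderate(scores, labels, threshold=1.01)
somewhere_between = moderate(scores, labels, threshold=0.5)
```

With the threshold at 0.0 every post is deleted, so nothing is missed but all three innocent posts go too. With the threshold above 1.0 nothing is deleted, so there are no false positives and all three hateful posts stay up. At 0.5 the toy data gives two caught, one false positive, and one missed, which is the kind of trade the platform actually has to pick.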
