Discover and read the best of Twitter Threads about #AlphaZero

Most recent (3)

Excited to share our work, published on the cover of @Nature, which surpasses human knowledge in a 50-year-old open question in Mathematics!

We introduce #AlphaTensor, an #AlphaZero-based RL agent that discovers faster exact matrix multiplication algorithms.

🧵1/

Matrix Multiplication (MatMul) is one of the root node problems where any speedup results in improvements in many areas, including Matrix Inversion, Factorization, & Neural Networks.

In industry, MatMul is used for image processing, speech recognition, computer graphics, etc. 2/

We formulated the problem of finding MatMul algorithms as a single-player game. In each episode, the agent starts from a tensor representing a MatMul operator and has to find the shortest path to an all-zero tensor. The length of this path corresponds to the # multiplications. 3/
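To make the game concrete, here is a minimal sketch (not the AlphaTensor code) that replays Strassen's classic 2x2 algorithm as a sequence of moves. It assumes a row-major flattening of the matrices and that each move subtracts one rank-one tensor u ⊗ v ⊗ w; the helper names `matmul_tensor` and `play` are hypothetical and chosen only for illustration.

```python
import numpy as np

def matmul_tensor(n):
    """T[i, j, k] = 1 iff a_i * b_j contributes to c_k, where a, b, c are
    the row-major flattenings of A, B and C = A @ B (an assumed convention)."""
    T = np.zeros((n * n, n * n, n * n), dtype=int)
    for p in range(n):
        for q in range(n):
            for r in range(n):
                T[p * n + q, q * n + r, p * n + r] = 1
    return T

# Strassen's seven products M1..M7 written as factor rows.
# Row r of U and V gives the entries of A and B combined in product M_r;
# row r of W gives how M_r is added into [C11, C12, C21, C22].
U = np.array([[1, 0, 0, 1], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 0, 1],
              [1, 1, 0, 0], [-1, 0, 1, 0], [0, 1, 0, -1]])
V = np.array([[1, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, -1], [-1, 0, 1, 0],
              [0, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]])
W = np.array([[1, 0, 0, 1], [0, 0, 1, -1], [0, 1, 0, 1], [1, 0, 1, 0],
              [-1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0]])

def play(T, moves):
    """Subtract one rank-one tensor per move; return the number of moves
    needed to reach the all-zero tensor, or None if it is never reached."""
    residual = T.copy()
    for step, (u, v, w) in enumerate(moves, start=1):
        residual -= np.einsum("i,j,k->ijk", u, v, w)
        if not residual.any():
            return step
    return None

steps = play(matmul_tensor(2), zip(U, V, W))
print(steps)  # 7: Strassen multiplies 2x2 matrices with 7 multiplications
```

The path length is 7 here, compared with 8 multiplications for the schoolbook 2x2 method, which is the sense in which a shorter path means a faster algorithm.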

Do #RL models have scaling laws like LLMs?

#AlphaZero does, and the laws imply SotA models were too small for their compute budgets.

Check out our new paper:

arxiv.org/abs/2210.00849

Summary 🧵(1/7):

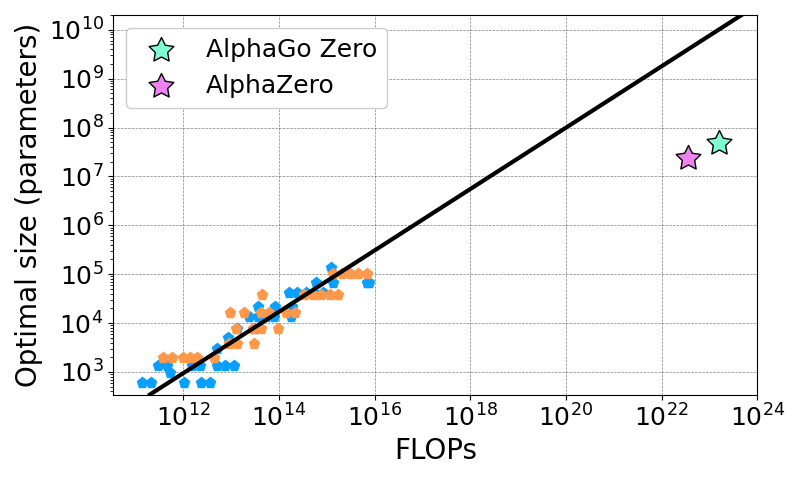

We train AlphaZero MLP agents on Connect Four & Pentago, and find three power-law scaling relations.

Performance scales as a power of parameters or compute when not bottlenecked by the other, and optimal NN size scales as a power of available compute. (2/7)
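As a rough illustration of what "scales as a power" means in practice, the sketch below fits a relation of the form N_opt = a · C^b by linear regression in log-log space. The data are synthetic and the values of `true_a` and `true_b` are made up for the example; they are not results from the paper, and `fit_power_law` is a hypothetical helper.

```python
import numpy as np

def fit_power_law(x, y):
    """Least-squares fit of y = a * x**b, done as a straight line in log-log space."""
    b, log_a = np.polyfit(np.log(x), np.log(y), deg=1)
    return np.exp(log_a), b

# Synthetic data only: a made-up power law with small multiplicative noise.
rng = np.random.default_rng(0)
compute = np.logspace(10, 16, 13)       # hypothetical compute budgets
true_a, true_b = 0.05, 0.6              # assumed values, NOT from the paper
noise = np.exp(rng.normal(0.0, 0.05, compute.size))
optimal_params = true_a * compute**true_b * noise

a, b = fit_power_law(compute, optimal_params)
print(f"fitted prefactor a = {a:.3g}, fitted exponent b = {b:.3f}")
```

On a log-log plot a power law is a straight line whose slope is the exponent b, which is why the fit reduces to ordinary linear regression.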

Looking for a few more favourite resources from the team? Today’s #AtHomeWithAI picks are from software engineer Julian Schrittwieser (@Mononofu), one of the team behind #AlphaZero! (1/6)

Many people are now moving code into JAX. To get up to speed, Julian suggests exploring the DeepMind Haiku library, designed to help you implement deep reinforcement learning algorithms. Explore it here: bit.ly/2zBIkpR #AtHomeWithAI

Fancy a long read? Though the field of DL moves very quickly, @Mononofu considers both of these good foundational resources:

Neural Networks and DL from @michael_nielsen bit.ly/3ePYa06

DL by @goodfellow_ian, Yoshua Bengio & @AaronCourville bit.ly/351qMzb #AtHomeWithAI
