Discover and read the best of Twitter Threads about #CausalTwitter

Most recent (4)

Excited to have @mpiccininni3 speaking at the @turinginst causal inference interest group about whether cognitive screening tests should be corrected for age and education

#CIIG #EpiTwitter #CausalTwitter

DoWhy 0.9 includes some exciting new extensions and features, including better sensitivity analyses, new identification algorithms, and more. I'm particularly excited to see so many new contributors joining in on this release!

#causality #causalinf #causaltwitter

Ezequiel Smucler (@hazqiyal) adds an identification algorithm that finds optimal backdoor adjustment sets, i.e. sets that yield estimators with the smallest asymptotic variance

pywhy.org/dowhy/v0.9/exa…
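Why the choice among valid adjustment sets matters can be seen in a small simulation. This is a sketch under assumed, hypothetical variables, not DoWhy's identification algorithm: in a toy linear model where Z confounds treatment A and outcome Y, I affects only A, and P affects only Y, the sets {Z}, {Z, P} and {Z, I} are all valid backdoor adjustment sets, so all three give unbiased estimates of the effect of A, but their spread differs.

```python
import numpy as np

def ols_coef(y, *covariates):
    """OLS with intercept; returns the coefficient on the first covariate (the treatment)."""
    X = np.column_stack([np.ones(len(y)), *covariates])
    return np.linalg.lstsq(X, y, rcond=None)[0][1]

rng = np.random.default_rng(7)
n, reps = 500, 300
est = {"{Z}": [], "{Z, P}": [], "{Z, I}": []}
for _ in range(reps):
    # Hypothetical structural model; the true effect of A on Y is 1.0.
    Z, I, P = rng.normal(size=(3, n))
    A = Z + 1.5 * I + rng.normal(size=n)             # I affects A only
    Y = 1.0 * A + Z + 2.0 * P + rng.normal(size=n)   # P affects Y only
    est["{Z}"].append(ols_coef(Y, A, Z))
    est["{Z, P}"].append(ols_coef(Y, A, Z, P))
    est["{Z, I}"].append(ols_coef(Y, A, Z, I))

sd = {k: np.std(v) for k, v in est.items()}
for k, v in est.items():
    print(f"adjust for {k:7s} mean={np.mean(v):.3f} sd={sd[k]:.3f}")
```

All three means sit near 1.0. Adding the outcome-only predictor P shrinks the standard deviation, while adding the treatment-only variable I inflates it, so in this toy graph {Z, P} has the smallest variance of the three.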

Jeffrey Gleason adds E-value sensitivity analysis: pywhy.org/dowhy/v0.9.1/e…
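For readers new to the E-value of VanderWeele and Ding: for an observed risk ratio RR > 1 it equals RR + sqrt(RR × (RR − 1)), the minimum strength of association (on the risk-ratio scale) that an unmeasured confounder would need with both treatment and outcome to fully explain away the estimate. A minimal Python sketch for a point estimate follows; the function name `e_value_rr` is hypothetical and is not DoWhy's API.

```python
import math

def e_value_rr(rr: float) -> float:
    """Hypothetical helper: E-value for a point estimate on the risk-ratio scale."""
    if rr <= 0:
        raise ValueError("risk ratio must be positive")
    if rr < 1:
        rr = 1.0 / rr  # protective estimates are inverted before applying the formula
    return rr + math.sqrt(rr * (rr - 1.0))

print(round(e_value_rr(2.0), 2))  # 3.41
```

An observed RR of 2 can only be explained away by a confounder associated with both treatment and outcome by a risk ratio of at least about 3.41 each; an RR of exactly 1 gives an E-value of 1.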

and Anusha0409 adds sensitivity analysis for non-parametric estimators: pywhy.org/dowhy/v0.9.1/e…

🚨 New blog post (a series!)

An Illustrated Guide to Targeted Maximum Likelihood Estimation 🎯

Part 1: motivation for “targeting” an estimand for inference and why we can & should incorporate data-adaptive/machine learning models

🧵1/

khstats.com/blog/tmle/tuto… #causaltwitter

Part 2: step-by-step explanations of the TMLE algorithm for a binary exposure and outcome using words, equations, #rstats code, and colored boxes™️

This corresponds to a printable TMLE “cheat sheet”

khstats.com/blog/tmle/tuto…

2/
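A minimal Python sketch of the usual TMLE steps for a binary exposure and outcome, assuming plain logistic regressions stand in for the data-adaptive/machine learning fits the series motivates. The simulated data, the helper names (`fit_logistic`, `tmle_ate`) and all parameters are hypothetical and are not taken from the blog post's #rstats code.

```python
import numpy as np

def expit(x):
    return 1.0 / (1.0 + np.exp(-x))

def fit_logistic(X, y, offset=None, n_iter=25):
    """Logistic regression by Newton-Raphson; `offset` is added to the linear predictor."""
    n, p = X.shape
    off = np.zeros(n) if offset is None else offset
    beta = np.zeros(p)
    for _ in range(n_iter):
        mu = expit(off + X @ beta)
        grad = X.T @ (y - mu)
        hess = X.T @ (X * (mu * (1.0 - mu))[:, None])
        beta = beta + np.linalg.solve(hess, grad)
    return beta

def tmle_ate(W, A, Y):
    """Hypothetical helper: TMLE of the ATE for binary A and Y with one covariate W."""
    n = len(Y)
    one, zero = np.ones(n), np.zeros(n)
    # Step 1: initial outcome model Qbar(A, W) = P(Y = 1 | A, W), kept on the logit scale.
    bQ = fit_logistic(np.column_stack([one, A, W]), Y)
    def q_logit(a):
        return np.column_stack([one, a, W]) @ bQ
    lA, l1, l0 = q_logit(A), q_logit(one), q_logit(zero)
    # Step 2: propensity score g(W) = P(A = 1 | W).
    Xg = np.column_stack([one, W])
    g = expit(Xg @ fit_logistic(Xg, A))
    # Step 3: clever covariate H(A, W).
    H = A / g - (1 - A) / (1 - g)
    # Step 4: fluctuation parameter: regress Y on H with offset logit(Qbar(A, W)), no intercept.
    eps = fit_logistic(H[:, None], Y, offset=lA)[0]
    # Step 5: update the initial predictions along the fluctuation.
    QA_star = expit(lA + eps * H)
    Q1_star = expit(l1 + eps / g)
    Q0_star = expit(l0 - eps / (1 - g))
    # Step 6: plug-in estimate of the ATE.
    ate = np.mean(Q1_star - Q0_star)
    # Step 7: standard error from the estimated efficient influence curve.
    ic = H * (Y - QA_star) + (Q1_star - Q0_star) - ate
    return ate, np.sqrt(np.var(ic) / n)

# Hypothetical simulated data with confounding by W.
rng = np.random.default_rng(42)
n = 5_000
W = rng.normal(size=n)
A = rng.binomial(1, expit(0.5 * W))
Y = rng.binomial(1, expit(-0.5 + 1.0 * A + 0.8 * W))
ate, se = tmle_ate(W, A, Y)
print(f"TMLE ATE: {ate:.3f}  (95% CI {ate - 1.96 * se:.3f} to {ate + 1.96 * se:.3f})")
```

In practice Steps 1 and 2 would use flexible learners (for example a Super Learner ensemble), and g is often truncated away from 0 and 1; both are left out here to keep the sketch short.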

Part 3: the statistical properties of TMLE 🧚🏽‍♂️

Double robustness! Efficiency! Explanations plus a brief outline of why TMLE works & lots of references to learn more if you’d like.

khstats.com/blog/tmle/tuto…

3/

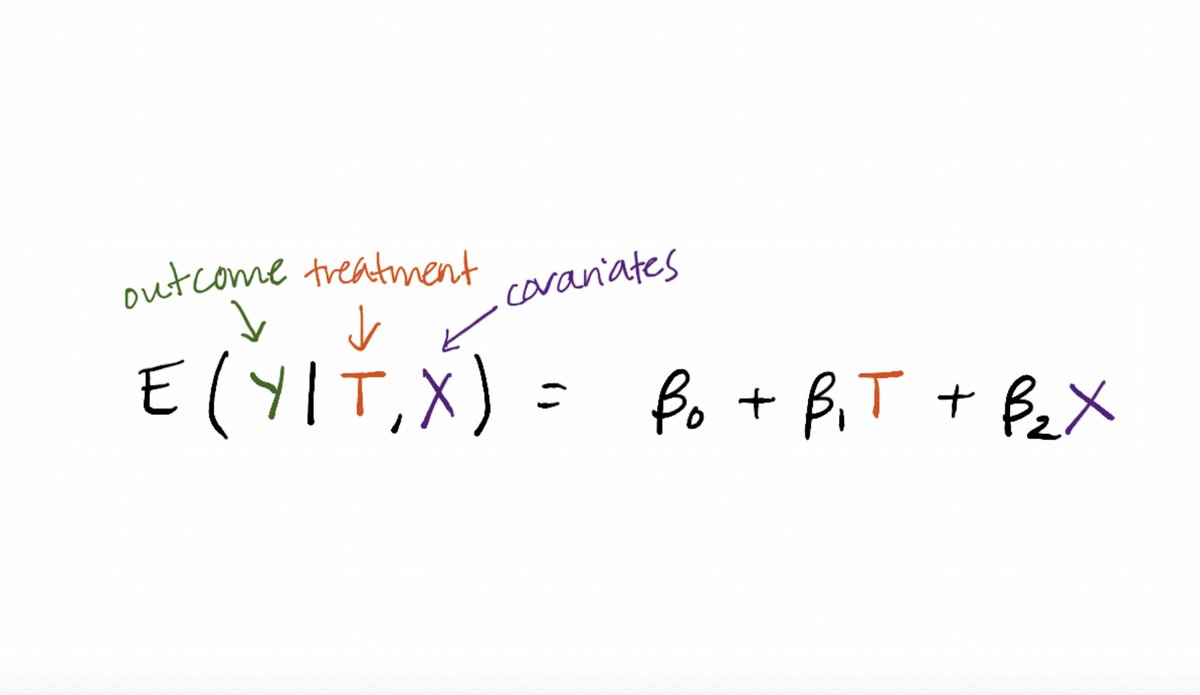

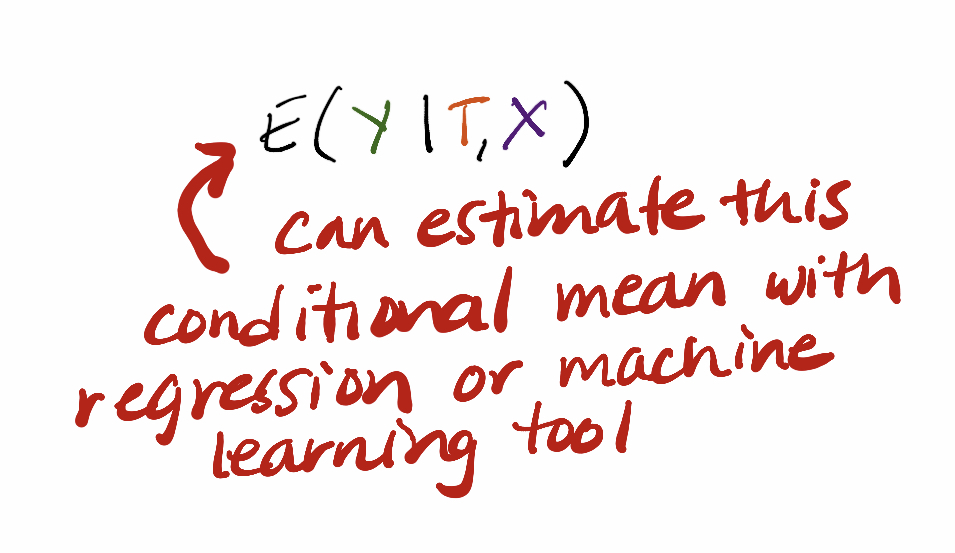
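One standard way to see where double robustness comes from (a sketch in notation that is not taken from the blog post): let $\bar Q_0(a, W) = E[Y \mid A = a, W]$ and $g_0(W) = P(A = 1 \mid W)$ be the true outcome regression and propensity score, $\bar Q$ and $g$ fixed working versions of them (assuming $0 < g < 1$), and $\psi_0 = E[\bar Q_0(1, W) - \bar Q_0(0, W)]$. The augmented (one-step) estimator and its bias are

$$
\hat\psi = \frac{1}{n}\sum_{i=1}^{n}\left[\bar Q(1, W_i) - \bar Q(0, W_i) + \left(\frac{A_i}{g(W_i)} - \frac{1 - A_i}{1 - g(W_i)}\right)\bigl(Y_i - \bar Q(A_i, W_i)\bigr)\right]
$$

$$
E[\hat\psi] - \psi_0 = E\left[\bigl(g_0(W) - g(W)\bigr)\left\{\frac{\bar Q_0(1, W) - \bar Q(1, W)}{g(W)} + \frac{\bar Q_0(0, W) - \bar Q(0, W)}{1 - g(W)}\right\}\right]
$$

The bias is a product of the two models' errors: it vanishes if $g = g_0$ (first factor is zero) or if $\bar Q = \bar Q_0$ (both terms in braces are zero). Because the TMLE fluctuation step solves $\sum_i H(A_i, W_i)\bigl(Y_i - \bar Q^*(A_i, W_i)\bigr) = 0$, the TMLE plug-in estimate equals this expression evaluated at the updated fit $\bar Q^*$, so it inherits the same structure.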

Tweetorial on going from regression to estimating causal effects with machine learning.

I get a lot of questions from students regarding how to think about this *conceptually*, so this is a beginner-friendly #causaltwitter high-level overview with additional references.
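One common route from a regression model to a causal effect is g-computation (standardization): fit the outcome regression, predict every unit's outcome with treatment set to 1 and to 0, and average the difference. A minimal Python sketch follows, assuming simulated data and a plain linear regression with a treatment-by-covariate interaction standing in for the machine learning model (any regressor with a predict step could take its place); the data-generating process and names are hypothetical, chosen so the true average effect is 2.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000

# Hypothetical data: W confounds the effect of treatment A on outcome Y.
W = rng.normal(size=n)
A = rng.binomial(1, 1.0 / (1.0 + np.exp(-2.0 * W)))       # higher W -> more likely treated
Y = 2.0 * A + 3.0 * W + 1.0 * A * W + rng.normal(size=n)  # true ATE = 2 + E[W] = 2

# A naive comparison of means is confounded by W.
naive = Y[A == 1].mean() - Y[A == 0].mean()

def design(a, w):
    """Design matrix for the outcome model: intercept, treatment, covariate, interaction."""
    a = np.broadcast_to(a, w.shape).astype(float)
    return np.column_stack([np.ones_like(w), a, w, a * w])

# Step 1: fit the outcome regression E[Y | A, W].
beta, *_ = np.linalg.lstsq(design(A, W), Y, rcond=None)

# Step 2: predict every unit's outcome under A = 1 and under A = 0.
y1 = design(1, W) @ beta
y0 = design(0, W) @ beta

# Step 3: average the individual differences.
ate = np.mean(y1 - y0)
print(f"naive difference: {naive:.2f}   g-computation ATE: {ate:.2f}")
```

The naive difference is pulled far above 2 because treated units tend to have higher W, while the standardized estimate lands close to 2. The causal reading still rests on the usual assumptions (no unmeasured confounding, positivity, correct or flexible enough outcome model).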
