Discover and read the best of Twitter Threads about #EMNLP2022

Most recents (6)

My co-lead @KaufCarina and I present: an in-depth investigation of event plausibility judgments in language models.

A 🧵 1/

arxiv.org/abs/2212.01488

A 🧵 1/

arxiv.org/abs/2212.01488

Knowledge of event schemas is a vital component of world knowledge. How much of it can be acquired from text corpora via the word-in-context prediction objective?

2/

2/

We test this Q using a controlled minimal pairs paradigm, with simple event descriptions. We manipulate plausibility by swapping the agent and the patient (The teacher bought the laptop / The laptop bought the teacher) or changing the patient (The actor won the award/battle). 3/

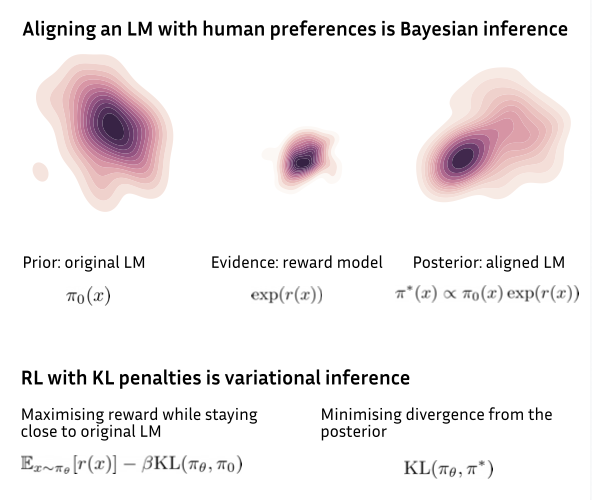

RL with KL penalties – a powerful approach to aligning language models with human preferences – is better seen as Bayesian inference. A thread about our paper (with @EthanJPerez and @drclbuckley) to be presented at #emnlp2022 🧵arxiv.org/pdf/2205.11275… 1/11

@EthanJPerez @drclbuckley RL with KL penalties is a powerful algorithm behind RL from human feedback (RLHF), the methodology heavily used by @OpenAI (InstructGPT), @AnthropicAI (helpful assistant) and @DeepMind (Sparrow) for aligning LMs with human preferences such as being helpful and avoiding harm. 2/14

@EthanJPerez @drclbuckley @OpenAI @AnthropicAI @DeepMind Aligning language models through RLHF consists of (i) training a reward model to predict human preference scores (reward) and (ii) fine-tuning a pre-trained LM to maximise predicted reward. 3/14

📣 9 papers accepted at #emnlp2022 (7 main conference + 2 Findings)

🧵 with links to camera ready preprints 👇

🧵 with links to camera ready preprints 👇

1) “Does Corpus Quality Really Matter for Low-Resource Languages?”

We introduce a new corpus for Basque that has a higher quality according to annotators, but find that this improvement does not carry over to downstream NLU tasks.

arxiv.org/abs/2203.08111

We introduce a new corpus for Basque that has a higher quality according to annotators, but find that this improvement does not carry over to downstream NLU tasks.

arxiv.org/abs/2203.08111

2) “Efficient Large Scale Language Modeling with Mixtures of Experts”

We study how MoE LMs scale in comparison with dense LMs in a wide range of settings. MoEs are more efficient, but their advantage reduces at scale and varies greatly across tasks!

arxiv.org/abs/2112.10684

We study how MoE LMs scale in comparison with dense LMs in a wide range of settings. MoEs are more efficient, but their advantage reduces at scale and varies greatly across tasks!

arxiv.org/abs/2112.10684

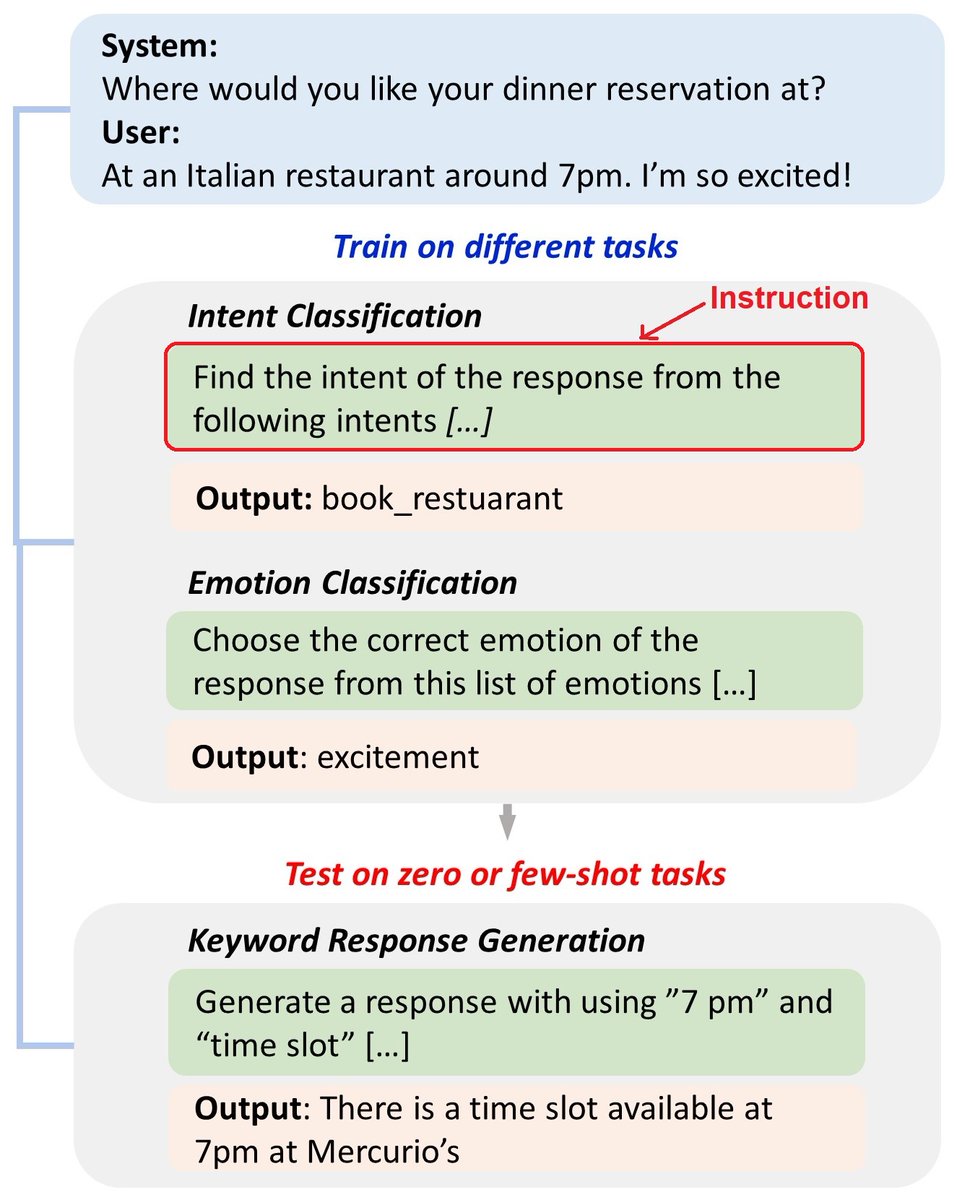

Can instruction tuning improve zero and few-shot performance on dialogue tasks? We introduce InstructDial, a framework that consists of 48 dialogue tasks created from 59 openly available dialogue datasets

#EMNLP2022🚀

Paper 👉 arxiv.org/abs/2205.12673

Work done at @LTIatCMU

🧵👇

#EMNLP2022🚀

Paper 👉 arxiv.org/abs/2205.12673

Work done at @LTIatCMU

🧵👇

Instruction tuning involves fine-tuning a model on a collection of tasks specified through natural language instructions (T0, Flan models). We systematically studied instruction tuning for dialogue tasks and show it works a lot better than you might expect!

New work! :D

We show evidence that DALL-E 2, in stark contrast to humans, does not respect the constraint that each word has a single role in its visual interpretation.

Work with @ravfogel and @yoav.

BlackboxNLP @ #emnlp2022

Below, "a person is hearing a bat"

We show evidence that DALL-E 2, in stark contrast to humans, does not respect the constraint that each word has a single role in its visual interpretation.

Work with @ravfogel and @yoav.

BlackboxNLP @ #emnlp2022

Below, "a person is hearing a bat"

We detail three types of behaviors that are inconsistent with the single role per word constraint

Want a performant, freely available search engine for chemical synthesis protocols? Check out our #EMNLP2022 demo paper: “SynKB: Semantic Search for Synthetic Procedures”. 👇

Demo: tinyurl.com/synkb

Paper: arxiv.org/abs/2208.07400

Code: github.com/bflashcp3f/Syn…

(1/n)

Demo: tinyurl.com/synkb

Paper: arxiv.org/abs/2208.07400

Code: github.com/bflashcp3f/Syn…

(1/n)

In this paper, we present SynKB, the largest open-source, automatically extracted knowledge base of chemical synthesis protocols, which searches over 6 million chemical synthesis procedures collected from patents.

(2/n)

(2/n)