Discover and read the best of Twitter Threads about #jax

Most recent (13)

There's a new programming language in town - it's Mojo! I'm more than a little excited about it. It's Python, but with none of Python's problems.

You can write code as fast as C, and deploy small standalone applications like C.

My post is below, and a 🧵

fast.ai/posts/2023-05-…

Python is the language that I have used for nearly all my work over the last few years. It is a beautiful language. It has an elegant core on which everything else is built.

But it comes with a downside: performance. It's thousands of times slower than C.

Python programmers learn to avoid using Python for the implementation of performance-critical sections, instead using Python wrappers over C, FORTRAN, Rust, etc.

But this “two-language” approach has serious downsides. It's complex, hard to debug or profile, & hard to deploy.

Fresh!!

Fully differentiable Hückel model using #JAX.

i) We optimize the atom types for different molecular frameworks to find the molecule with the targeted property (HOMO-LUMO) 🧵 (1/3)

@kjelljorner @robpollice @A_Aspuru_Guzik @chemuoft

#compchem
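A differentiable Hückel model can be sketched in a few lines of JAX. This is a minimal illustration, not the authors' code. It assumes the simple Hückel Hamiltonian: on-site energies `alphas` on the diagonal, a single hypothetical coupling `beta = -1` for every bond, and one π electron per atom. The sketch computes the HOMO-LUMO gap with `jnp.linalg.eigh`, so `jax.grad` returns the sensitivity of the gap to each atom's on-site energy, which is the quantity an atom-type optimization would follow.

```python
import jax
import jax.numpy as jnp

def huckel_gap(alphas, adjacency, beta=-1.0):
    """HOMO-LUMO gap of a simple Hückel Hamiltonian (hypothetical helper).

    alphas:    (n,) on-site energies, one per atom (encodes the atom type)
    adjacency: (n, n) symmetric 0/1 bond matrix
    beta:      coupling between bonded atoms (assumed identical for all bonds)
    """
    h = jnp.diag(alphas) + beta * adjacency
    energies = jnp.linalg.eigh(h)[0]    # eigenvalues in ascending order
    n_occ = alphas.shape[0] // 2        # one pi electron per atom, two per orbital
    return energies[n_occ] - energies[n_occ - 1]  # LUMO - HOMO

# Butadiene: a 4-atom chain of identical carbons (alpha = 0, energies in units of |beta|)
adjacency = jnp.array([[0., 1., 0., 0.],
                       [1., 0., 1., 0.],
                       [0., 1., 0., 1.],
                       [0., 0., 1., 0.]])
alphas = jnp.zeros(4)

gap = huckel_gap(alphas, adjacency)              # ≈ 1.236 (= 2 × 0.618)
d_gap = jax.grad(huckel_gap)(alphas, adjacency)  # d(gap) / d(alpha_i)
```

For butadiene `d_gap` is numerically zero. By the Coulson-Rushbrooke pairing theorem, the HOMO and LUMO of an alternant hydrocarbon have equal coefficient magnitudes on every atom, so ∂gap/∂α_i = c²(i, LUMO) − c²(i, HOMO) vanishes. Starting from heteroatom-like, non-uniform `alphas` breaks that symmetry and gives a usable gradient.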

ii) Parameter optimization à la machine learning for different molecular properties, for example, polarizability

30% improvement with only 100 DFT training points.

🧵 (2/3)

#arxiv arxiv.org/abs/2211.16763 #github

(inverse molecular design)

github.com/RodrigoAVargas…

(parameter optimization)

github.com/RodrigoAVargas…

🧵 (3/3)

2/2 4 pm EST: These are the key messages for Subtropical Storm #Nicole. See hurricanes.gov for more details.

"Eqxvision" is a new #JAX library for computer vision!⚡️

Reddit: reddit.com/r/MachineLearn…

GitHub: github.com/paganpasta/eqx…

Built by @|paganpasta, it's now at feature parity with torchvision for classification models. (With segmentation, object detection etc. on the way!)

1/2

It's been super cool watching this project grow, and a big thank-you to @|paganpasta for all their upstream contributions to Equinox. (Read: fixing my bugs!🐛🪲)

2/2

I guess I'm taking a leaf out of @DynamicWebPaige's book and tweeting about #JAX ecosystem stuff... :D

⚡️ My PhD thesis is on arXiv! ⚡️

To quote my examiners, it is "the textbook of neural differential equations", across ordinary/controlled/stochastic diffeqs.

w/ unpublished material:

- generalised adjoint methods

- symbolic regression

- + more!

arxiv.org/abs/2202.02435

v🧵 1/n

If you follow me then there's a decent chance that you already know what an NDE is. (If you don't, go read the introductory Chapter 1 to my thesis haha -- it's only 6 pages long.) Put a neural network inside a differential equation, and suddenly cool stuff starts happening.

2/n
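Here is a minimal pure-JAX sketch of "a neural network inside a differential equation". A small MLP defines the vector field dy/dt = f_θ(y). A fixed-step RK4 solver, written with `jax.lax.scan`, integrates it, and `jax.grad` differentiates straight through the solver steps (discretise-then-optimise). The helper names, network sizes and step count are hypothetical choices. The toy omits the adaptive solvers and adjoint methods that the thesis covers.

```python
import jax
import jax.numpy as jnp

def init_mlp(key, sizes):
    """Hypothetical helper: list of (weight, bias) pairs for an MLP."""
    keys = jax.random.split(key, len(sizes) - 1)
    return [(jax.random.normal(k, (m, n)) / jnp.sqrt(m), jnp.zeros(n))
            for k, (m, n) in zip(keys, zip(sizes[:-1], sizes[1:]))]

def vector_field(params, y):
    """f_theta(y): tanh hidden layers, linear output."""
    for w, b in params[:-1]:
        y = jnp.tanh(y @ w + b)
    w, b = params[-1]
    return y @ w + b

def odeint_rk4(params, y0, t1=1.0, steps=20):
    """Integrate dy/dt = f_theta(y) from t=0 to t1 with classic fixed-step RK4."""
    dt = t1 / steps
    def step(y, _):
        k1 = vector_field(params, y)
        k2 = vector_field(params, y + 0.5 * dt * k1)
        k3 = vector_field(params, y + 0.5 * dt * k2)
        k4 = vector_field(params, y + dt * k3)
        return y + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4), None
    y1, _ = jax.lax.scan(step, y0, None, length=steps)
    return y1

def loss(params, y0, target):
    """Squared error between the ODE solution at t1 and a target state."""
    return jnp.mean((odeint_rk4(params, y0) - target) ** 2)

params = init_mlp(jax.random.PRNGKey(0), [2, 16, 2])
y0 = jnp.array([1.0, 0.0])
target = jnp.array([0.0, 1.0])
grads = jax.grad(loss)(params, y0, target)  # backpropagates through every RK4 step
```

A training loop would then update the parameters with these gradients, for example `params = jax.tree_util.tree_map(lambda p, g: p - 1e-2 * g, params, grads)`.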

Neural differential equations are a beautiful way of building models, offering:

- high-capacity function approximation;

- strong priors on model space;

- the ability to handle irregular data;

- memory efficiency;

- a foundation of well-understood theory.

3/n

Watch me code a Neural Network from Scratch in pure JAX! 🥳 in this 3rd video of the Machine Learning with JAX series.

YouTube:

GitHub: github.com/gordicaleksa/g…

@DeepMind @GoogleAI @jakevdp @froystig @SingularMattrix @cdleary

#jax

In this video, I build an MLP (multi-layer perceptron) and train it as a classifier on MNIST (although it's trivial to use a more complex dataset), all in pure JAX (no Flax/Haiku/Optax).

2/
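The pure-JAX recipe can be sketched as follows: parameters as plain arrays, a forward pass, a cross-entropy loss, and a manual SGD update. This is not the code from the video's repo. The layer sizes, learning rate and random stand-in batch are assumptions; 784 inputs and 10 classes are chosen only to match MNIST's shapes.

```python
import jax
import jax.numpy as jnp

LR = 0.01  # assumed learning rate for plain SGD

def init_params(key, sizes=(784, 512, 256, 10)):
    """He-initialised (weight, bias) pairs, one per layer."""
    keys = jax.random.split(key, len(sizes) - 1)
    return [(jax.random.normal(k, (m, n)) * jnp.sqrt(2.0 / m), jnp.zeros(n))
            for k, m, n in zip(keys, sizes[:-1], sizes[1:])]

def forward(params, x):
    """ReLU hidden layers followed by a linear layer producing logits."""
    for w, b in params[:-1]:
        x = jax.nn.relu(x @ w + b)
    w, b = params[-1]
    return x @ w + b

def loss_fn(params, x, y):
    """Mean cross-entropy between logits and integer class labels."""
    log_probs = jax.nn.log_softmax(forward(params, x))
    return -jnp.mean(jnp.take_along_axis(log_probs, y[:, None], axis=1))

@jax.jit
def update(params, x, y):
    """One SGD step on the whole batch; returns new params and the pre-step loss."""
    loss, grads = jax.value_and_grad(loss_fn)(params, x, y)
    params = jax.tree_util.tree_map(lambda p, g: p - LR * g, params, grads)
    return params, loss

# Random stand-in for a batch of flattened 28x28 images and their labels
x = jax.random.normal(jax.random.PRNGKey(1), (128, 784))
y = jax.random.randint(jax.random.PRNGKey(2), (128,), 0, 10)
params = init_params(jax.random.PRNGKey(0))
losses = []
for _ in range(50):
    params, loss = update(params, x, y)
    losses.append(float(loss))
```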

I then add cool visualizations such as:

* Visualizing MLP's learned weights

* Visualizing embeddings of a batch of images in t-SNE

* Finally, we analyze the dead neurons

3/
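One simple way to quantify dead neurons is sketched below. It uses our own assumed definition, which is not necessarily the video's: a ReLU unit counts as dead on a batch if its pre-activation is non-positive for every example. Such a unit outputs zero and passes no gradient back through itself. The toy layer and its bias values are hypothetical.

```python
import jax
import jax.numpy as jnp

def dead_fraction(pre_activations):
    """Fraction of hidden units whose ReLU output is zero for the whole batch.

    pre_activations: (batch, hidden) values of x @ w + b before the ReLU.
    """
    dead = jnp.all(pre_activations <= 0.0, axis=0)  # True if the unit never fires
    return jnp.mean(dead.astype(jnp.float32))

# Toy layer: a large negative bias on the first unit kills it (illustrative only)
x = jax.random.normal(jax.random.PRNGKey(0), (256, 8))
w = jax.random.normal(jax.random.PRNGKey(1), (8, 4))
b = jnp.array([-100.0, 0.0, 0.0, 0.0])
frac = dead_fraction(x @ w + b)  # one dead unit out of four
```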

2nd video of the JAX tutorials series is out! 🥳

YT:

Code: github.com/gordicaleksa/g…

@DeepMind @GoogleAI @jakevdp @froystig @SingularMattrix @cdleary @huggingface

#jax

In this one, we learn all the necessary components to train complex ML models in parallel on multiple machines.

2/
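A common JAX pattern for this kind of data-parallel training is `jax.pmap` with a gradient all-reduce via `jax.lax.pmean`. The sketch below shows it on a toy linear-regression problem. It illustrates the pattern and is not necessarily the video's code; the model, learning rate and step count are assumptions. It runs unchanged on a single CPU (one device) or across several accelerators.

```python
import functools
import jax
import jax.numpy as jnp

def loss_fn(w, x, y):
    """Mean squared error of a linear model on one device's shard."""
    return jnp.mean((x @ w - y) ** 2)

@functools.partial(jax.pmap, axis_name="devices")
def train_step(w, x, y):
    grads = jax.grad(loss_fn)(w, x, y)                 # per-device gradient on its shard
    grads = jax.lax.pmean(grads, axis_name="devices")  # average gradients across devices
    return w - 0.1 * grads                             # identical update on every replica

n_dev = jax.local_device_count()
true_w = jnp.array([1.0, -2.0, 0.5])
# Shard a global batch along a leading device axis: (n_dev, per_device_batch, 3)
x = jax.random.normal(jax.random.PRNGKey(0), (n_dev, 32, 3))
y = x @ true_w
w = jnp.zeros((n_dev, 3))  # one parameter replica per device

for _ in range(200):
    w = train_step(w, x, y)
```

Because every replica applies the same averaged gradient, the copies of `w` stay identical without any explicit synchronisation step.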

As always - open up the notebook in Colab to make this an active learning experience (code + video):

github.com/gordicaleksa/g…

3/

End-to-end learning of multiple sequence alignments with differentiable Smith-Waterman

biorxiv.org/content/10.110…

A fun collaboration with Samantha Petti, Nicholas Bhattacharya, @proteinrosh, @JustasDauparas, @countablyfinite, @keitokiddo, @srush_nlp & @pkoo562 (1/8)
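The core idea of a differentiable Smith-Waterman can be sketched by replacing each `max` in the dynamic program with a temperature-smoothed max, `temp * logsumexp(values / temp)`. The gradient of the smoothed score with respect to the similarity matrix can then be read as a soft alignment: entry (i, j) is the probability that positions i and j are aligned under a Gibbs distribution over local alignments. This minimal version assumes a linear gap penalty and uses plain Python loops for clarity. The paper's exact formulation and its vectorised implementation may differ.

```python
import jax
import jax.numpy as jnp

def smooth_max(values, temp):
    """Differentiable stand-in for max: temp * logsumexp(values / temp)."""
    return temp * jax.nn.logsumexp(jnp.stack(values) / temp)

def smooth_sw_score(sim, gap=-1.0, temp=1.0):
    """Smoothed Smith-Waterman local-alignment score.

    sim:  (n, m) similarity between residue i of sequence A and residue j of B.
    gap:  linear gap penalty (assumed); temp -> 0 recovers the hard max.
    """
    n, m = sim.shape
    zero = jnp.zeros(())
    h = [[zero] * (m + 1) for _ in range(n + 1)]  # zero-padded DP table
    cells = []
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            h[i][j] = smooth_max([zero,                                 # start a new alignment
                                  h[i - 1][j - 1] + sim[i - 1, j - 1],  # align A[i] with B[j]
                                  h[i - 1][j] + gap,                    # A[i] against a gap
                                  h[i][j - 1] + gap], temp)             # B[j] against a gap
            cells.append(h[i][j])
    return smooth_max(cells, temp)  # a local alignment may end at any cell

# Toy example: +1 on the diagonal (matches), -1 elsewhere (mismatches)
sim = 2.0 * jnp.eye(3) - 1.0
score = smooth_sw_score(sim, temp=0.01)                      # close to the hard score 3
soft_alignment = jax.grad(smooth_sw_score)(sim, -1.0, 0.01)  # close to the identity
```

At a low temperature the result approaches classic Smith-Waterman. Higher temperatures spread the soft alignment over near-optimal paths, which is what gives useful gradients for end-to-end training.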

Toy MuJoCo + Box2D envs in OpenAI Gym are moving to #brax! 100x GPU/TPU speedup + purely pythonic + jax/pytorch-enabled, ready to be unleashed! Exciting news for the #brax #braxlines #jax teams. Also check out #composer, where I am adding more demos github.com/openai/gym/iss…

#braxlines:

#composer: github.com/google/brax/tr…

arxiv: arxiv.org/abs/2110.04686

#brax still cannot (and probably won't ever) match the full specs of mujoco/pybullet. But especially with the plans to open-source mujoco, I'm excited to see where there could be synergies.

Good to see a lot of large-scale, algorithmic deep RL researchers are aligned: "I personally believe that hardware accelerator support is more important, hence choosing Brax."

A thread on our latest optimizers work! We tune Nesterov/Adam to match performance of LARS/LAMB on their more commonly used workloads. We (@jmgilmer, Chris Shallue, @_arohan_, @GeorgeEDahl) do this to provide more competitive baselines for large-batch training speed measurements

We are **not** trying to prove that any optimizer is better than any other (more on that later). However, we believe that well tuned baselines are very important, especially in optimization where there are so many confounding factors.

In general, we found that LR schedules were the most impactful part of the pipeline to tune (that is, once we got all the model and dataset details to be the same!).
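Because the learning-rate schedule mattered most, here is a sketch of what such a schedule and optimizer look like in JAX: a hypothetical schedule (linear warmup, then linear decay to zero) driving a Nesterov momentum update on a toy quadratic. The schedule shape, its parameters (`base_lr`, `warmup_steps`, `total_steps`) and the update form (the common `v = μv + g`, `p -= lr·(g + μv)` variant) are assumptions for illustration. They are not the schedules or settings from the paper.

```python
import jax
import jax.numpy as jnp

def lr_schedule(step, base_lr=0.1, warmup_steps=10, total_steps=100):
    """Hypothetical schedule: linear warmup to base_lr, then linear decay to 0."""
    warmup = base_lr * (step + 1) / warmup_steps
    decay = base_lr * jnp.maximum(0.0, (total_steps - step) / (total_steps - warmup_steps))
    return jnp.where(step < warmup_steps, warmup, decay)

def nesterov_step(params, velocity, grads, lr, momentum=0.9):
    """One Nesterov momentum step (v = mu*v + g; p -= lr * (g + mu*v))."""
    velocity = momentum * velocity + grads
    return params - lr * (grads + momentum * velocity), velocity

def loss(x):
    return 0.5 * jnp.sum(x ** 2)  # toy quadratic with its minimum at 0

x = jnp.array([3.0, -4.0])
v = jnp.zeros_like(x)
for step in range(100):
    x, v = nesterov_step(x, v, jax.grad(loss)(x), lr_schedule(step))
```

In a real tuning study, `base_lr`, the warmup length and the decay shape would be the knobs searched over, alongside the momentum value.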

The Lie group SU(N) is very important in fundamental particle physics as a gauge symmetry group. Building SU(N) gauge-equivariant models is key to making ML useful in this field. It was not easy, but WE DID IT!

SU(N) gauge equivariant flows for lattice QCD:

arxiv.org/abs/2008.05456
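The symmetry these flows are built around can be checked numerically. For a closed loop of links such as a plaquette, a gauge transformation acts on the product of link matrices P by conjugation, P → Ω P Ω†. As a result, tr P is gauge invariant, and a map such as P ↦ P² is gauge equivariant. The sketch below uses a hypothetical helper and is not the paper's flow architecture. It samples SU(N) matrices as exp(iH) for random traceless Hermitian H and verifies both properties for N = 3.

```python
import jax
import jax.numpy as jnp

def random_su_n(key, n):
    """Random SU(n) matrix exp(iH) for a random traceless Hermitian H (hypothetical helper)."""
    k_re, k_im = jax.random.split(key)
    a = jax.random.normal(k_re, (n, n)) + 1j * jax.random.normal(k_im, (n, n))
    h = 0.5 * (a + a.conj().T)             # Hermitian
    h = h - jnp.trace(h) / n * jnp.eye(n)  # traceless, so det(exp(iH)) = exp(i tr H) = 1
    evals, evecs = jnp.linalg.eigh(h)      # H = V diag(evals) V^dagger
    return (evecs * jnp.exp(1j * evals)) @ evecs.conj().T  # exp(iH), unitary

def dagger(m):
    return m.conj().T

n = 3
p = random_su_n(jax.random.PRNGKey(0), n)      # stands in for a plaquette product
omega = random_su_n(jax.random.PRNGKey(1), n)  # gauge transformation at the loop's base site
p_gauge = omega @ p @ dagger(omega)

# Invariance: the trace (Wilson loop) is unchanged by the gauge transformation
invariant_ok = jnp.allclose(jnp.trace(p_gauge), jnp.trace(p), atol=1e-3)
# Equivariance: squaring commutes with the gauge transformation
equivariant_ok = jnp.allclose(p_gauge @ p_gauge, omega @ (p @ p) @ dagger(omega), atol=1e-3)
```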

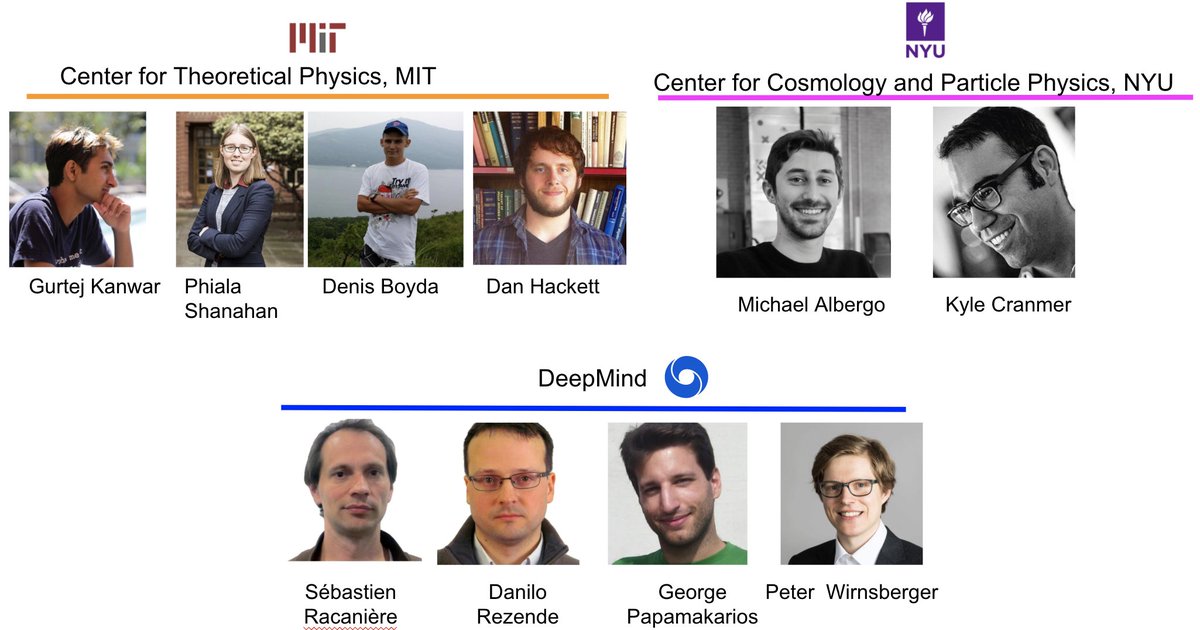

This work is the result of the most amazing collaboration between @MIT, @nyuniversity and @DeepMind and I couldn't be more thankful and proud to have the opportunity to work with this team.

The team: Denis Boyda, Gurtej Kanwar, @sracaniere, @DaniloJRezende, @msalbergo, @kylecranm, Daniel Hackett, Phiala Shanahan

📢📢Dopamine now also runs on #JAX !!📢📢

Happy to announce that our #RL library, dopamine, now also has JAX implementations of all our agents, including a new agent: QR-DQN!

github.com/google/dopamine

1/X
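QR-DQN represents each action's return distribution by N quantile estimates θ_i at the midpoints τ̂_i = (2i − 1) / 2N. It trains them with the quantile Huber loss ρ_τ(u) = |τ − 1{u < 0}| · L_κ(u), where u is the difference between a target sample and a predicted quantile. The sketch below implements that loss for a single state-action pair in JAX. It is not Dopamine's implementation, and the function names and the κ = 1 default are assumptions.

```python
import jax
import jax.numpy as jnp

def huber(u, kappa=1.0):
    """Huber loss L_kappa(u): quadratic near zero, linear in the tails."""
    abs_u = jnp.abs(u)
    return jnp.where(abs_u <= kappa, 0.5 * u ** 2, kappa * (abs_u - 0.5 * kappa))

def quantile_huber_loss(pred_quantiles, target_samples, kappa=1.0):
    """pred_quantiles: (N,) estimates theta_i; target_samples: (M,) target-distribution samples."""
    n = pred_quantiles.shape[0]
    tau_hat = (2 * jnp.arange(n) + 1) / (2.0 * n)               # quantile midpoints
    u = target_samples[None, :] - pred_quantiles[:, None]        # (N, M) pairwise errors
    weight = jnp.abs(tau_hat[:, None] - (u < 0).astype(u.dtype))
    return jnp.sum(jnp.mean(weight * huber(u, kappa), axis=1))   # sum over i, mean over targets

loss_over = quantile_huber_loss(jnp.array([0.0]), jnp.array([1.0]))    # 0.5 * 0.5 = 0.25
loss_under = quantile_huber_loss(jnp.array([0.0]), jnp.array([-3.0]))  # 0.5 * 2.5 = 1.25
grads = jax.grad(quantile_huber_loss)(jnp.array([0.0, 0.0]), jnp.array([1.0]))
```

The asymmetric weights are what separate the quantiles. In `grads`, the estimate at τ̂ = 0.75 is pushed toward a higher target three times harder than the one at τ̂ = 0.25.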

The JAX philosophy goes very well with that of Dopamine: flexibility for research without sacrificing simplicity.

I've been using it for a while now and I've found its modularity quite appealing in terms of simplifying some of the more difficult aspects of the agents.

2/X

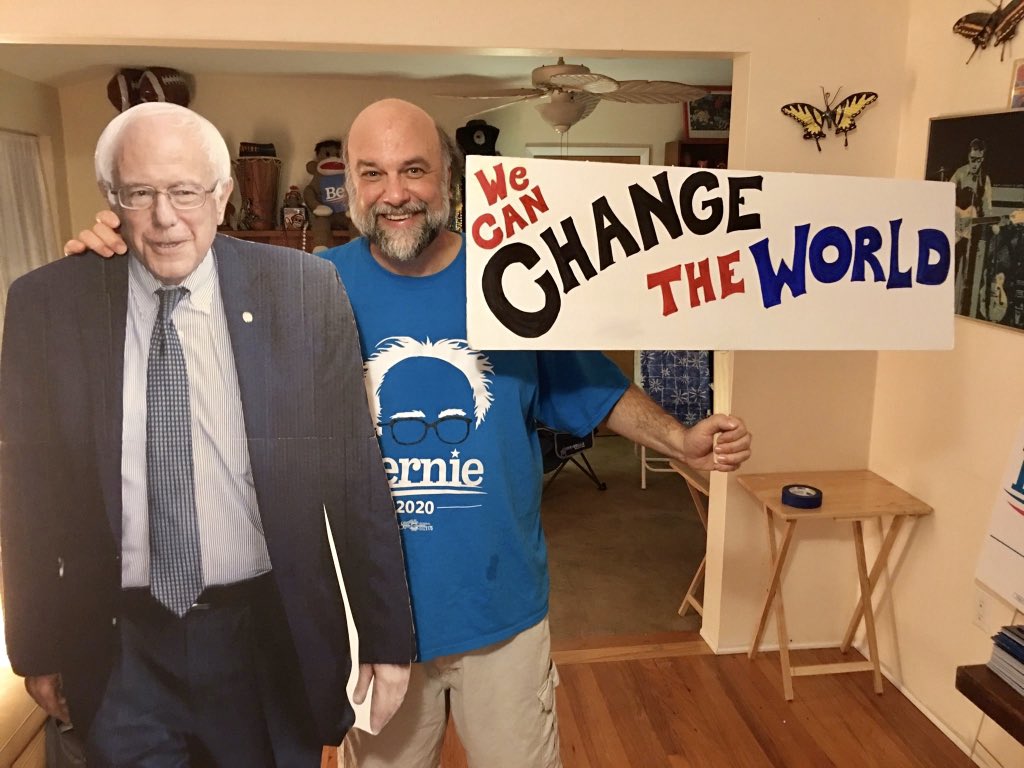

Good morning! We’ve got the incredible @CynthiaNixon firing up our Central Florida grassroots volunteers today! #FloridaForBernie #Bernie2020

Getting ready for the onslaught of volunteers coming who are ready to launch a canvass out of Miami Shores! #FloridaForBernie #Bernie2020

Miami for Bernie volunteer Nikola cast her ballot today for Bernie and brought her daughters to watch their mom vote for not just their future, but also the future of those who are less fortunate. #NotMeUs #IVoted #Bernie2020 #FloridaForBernie