Discover and read the best of Twitter Threads about #BERT

Most recent (24)

Welcome to day 2 of the 5th World Chatbots & Voice Summit, hosted in the beautiful city of #Berlin

I'll be keeping this thread going to cover all the presentations and learnings of the day.

As co-chair, I'll be looking after the chatbot track today.

#chatbotvoice2023

First up, Alin from @SapienceS2P

Talking about how to deliver value and a competitive edge through customised chatbot solutions

SapienceS2P is an #SAP procurement consultancy

Taking us through their #procurement chatbot, Sapience Satori, which can integrate with #ChatGPT, most SAP APIs and also communication channels - #teams #slack #whatsapp

1/ Elon Musk has been a significant influence on the use of Big Data. His investments in Twitter and Tesla have helped collect and use vast amounts of data. #ElonMusk #Tesla #Twitter #BigData

2/ Microsoft is another collector and user of Big Data. They have collected data through their products, such as Windows, Office, and Android, and through their services, such as Bing, Azure, and LinkedIn. #Microsoft #BigData

1/ It's not the size, it's the skill - now releasing #Neeva's Query Embedding Model!

Our query embedding model beats @openai’s Curie which is orders of magnitude bigger and 100000x more expensive. 🤯

Keep reading to find out how... 📖

2/ Query understanding is the lifeblood of #searchengines. Large search engines have spent millions of SWE hours building various signals like synonymy, spelling, term weighting, compounds, etc.

We don’t have that luxury. 🙄

Fortunately for us, #LLMs are here to build upon.

3/ We solve the problem of #query similarity: deciding when two user queries are looking for the same information on the web.

Why is this useful? Query-click data for web docs = strongest signal for search, QA, etc.; solving query equivalence => smear click signal over lots of user queries
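A minimal sketch of the "smear click signal" idea from 3/, assuming queries have already been turned into vectors. This is not Neeva's model: the toy embeddings, the click log, the example URLs, and the 0.9 cosine threshold are all hypothetical, chosen only to show how clicks recorded for one query could be shared with queries judged equivalent.

```python
import math
from collections import defaultdict

# Hypothetical toy embeddings; a real system would get these from a trained query encoder.
TOY_EMBEDDINGS = {
    "cheap flights to berlin": [0.9, 0.1, 0.0],
    "berlin flights low cost": [0.85, 0.15, 0.05],
    "bert paper pdf": [0.0, 0.2, 0.95],
}

# Hypothetical click log: (query, clicked URL, number of clicks).
CLICKS = [
    ("cheap flights to berlin", "flights.example/berlin", 40),
    ("bert paper pdf", "papers.example/bert", 12),
]


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def smear_clicks(embeddings, clicks, threshold=0.9):
    """Copy each click count to every query whose embedding is close enough."""
    smeared = defaultdict(lambda: defaultdict(int))
    for source_query, url, count in clicks:
        for query, vector in embeddings.items():
            if cosine(embeddings[source_query], vector) >= threshold:
                smeared[query][url] += count
    return {query: dict(urls) for query, urls in smeared.items()}


smeared = smear_clicks(TOY_EMBEDDINGS, CLICKS)
```

Here the query "berlin flights low cost" has no clicks of its own but inherits the 40 clicks logged for its near-duplicate, while the unrelated BERT query keeps its signal to itself.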

And in my last Twitter thread, I wanted to talk with you about some powerful approaches in #NLP and how we can use both #rstats and #python to unleash them 💪

One possible downside when using the bag of words approach described before is that you often cannot fully take the structure of the language into account (n-grams are one way, but they are often limited).

You also often need a lot of data to successfully train your model - which can be time-consuming and labor-intensive. An alternative is to use a pre-trained model. And here comes @Google's famous deep learning model: BERT.
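The bag-of-words limitation mentioned above can be seen in a few lines. The sketch below is only an illustration with two made-up sentences and whitespace tokenization (an assumption, not the thread's own preprocessing): both sentences get the same bag of words even though they mean different things, while bigrams recover some of the word order.

```python
from collections import Counter


def bag_of_words(text):
    """Count tokens, discarding their order."""
    return Counter(text.lower().split())


def bigrams(text):
    """Count adjacent token pairs, which keeps a little local order."""
    tokens = text.lower().split()
    return Counter(zip(tokens, tokens[1:]))


a = "the dog bit the man"
b = "the man bit the dog"

same_bag = bag_of_words(a) == bag_of_words(b)   # identical word counts
same_bigrams = bigrams(a) == bigrams(b)         # different word pairs
```

Bigrams only look one word ahead, which is why the thread calls n-grams "often limited" and moves on to pre-trained models.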

1/ Hearing about #BERT and wondering why #radiologists are starting to talk more about this Sesame Street character?

Check out this #tweetorial from TEB member @AliTejaniMD with recommendations to learn more about #NLP for radiology.

2/ #BERT is a language representation model taking the #NLP community by storm, but it’s not new! #BERT has been around since 2018 via @GoogleAI. You may interact with #BERT every day (chatbots, search tools).

But #BERT is relatively new to healthcare. What makes it different?

3/ For starters, #BERT is “bidirectional”: it doesn’t read the input text in just one direction (left-to-right or right-to-left). Instead, its #Transformer-based architecture provides an #attention mechanism that helps the model learn context based on ALL surrounding words.
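To make "context based on ALL surrounding words" concrete, here is a small sketch of attention weights with and without a left-to-right (causal) mask. It is a toy under stated assumptions: the 3x3 score matrix is made up, and a real Transformer computes such scores from learned query and key projections across many heads and layers.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(scores, causal=False):
    """Row i holds how much token i attends to each token j.

    With causal=True, token i may only look at positions j <= i
    (a left-to-right model). With causal=False, every token sees
    the whole sequence, as in a bidirectional encoder.
    """
    weights = []
    for i, row in enumerate(scores):
        if causal:
            row = [s if j <= i else float("-inf") for j, s in enumerate(row)]
        weights.append(softmax(row))
    return weights


# Hypothetical raw similarity scores for a 3-token input.
scores = [
    [1.0, 0.5, 2.0],
    [0.2, 1.0, 0.3],
    [0.1, 0.4, 1.0],
]

bidirectional = attention_weights(scores)
left_to_right = attention_weights(scores, causal=True)
```

In `left_to_right`, the first token puts zero weight on the two tokens that follow it; in `bidirectional`, it spreads weight over the whole input, which is the behaviour the tweet describes.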

Can DALLE answer riddles from the Hobbit? Let's find out!

#dalle #openai #experiment #dalle2 #aiart #AiArtwork #hobbit #LOTR #AI

(Except that the answer is mountains, not trees)

Over the past several months, I’ve been doing a deep-dive into transformer language models #NLProc and their applications in #psychology for the last part of my #PhD thesis. 👩🏻🎓 Here are a few resources that I’ve found invaluable and really launched me forward on this project 🧵:

🏃🏻♀️💨 If you already have some data and you want a jump start to classify it all using #transformers, check out @maria_antoniak’s #BERT for Humanists/Computational Social Scientists Talk: Colab notebook and other info: bertforhumanists.org/tutorials/#cla…

🤓 I’m an absolute nerd for stats and experiment design in psych, but doing #ML experiments is very different. A clear, detailed (and reasonable length) course to get up to speed on train-test splits, hyperparameter tuning, etc. and do the very best work: coursera.org/learn/deep-neu…

OPEN LETTER TO THE @NOS ON CHILD ABUSE AND HUMAN TRAFFICKING. As you know, on 4 October 2012 (2:00 p.m. local time) a hearing of the Helsinki Commission, chaired by Congressman Chris Smith, was held in Washington in the United States

Internationally, the Netherlands plays an important and therefore very reprehensible role in international human trafficking and child abuse. Among other things, the hearing addresses the role of #Demmink, who from 2002 to November 2012 was secretary-general of the @ministerieJenV

The failure to report on this has the consequence that human trafficking and child abuse can continue freely in the Netherlands. I would therefore like to appeal to the personal conscience and the

2021's been an eventful year!

- Spoke at @brighonSEO & #TechSEOBoost

- Created seopythonistas.com in @hamletbatista's honour

- Got my 1st #Python contract, then an ace DevRel job at @streamlit 🎈

- Published 7 apps used by SEOs & Data scientists

Apps recap is here! ↓🧵

1. Keyword Clustering app

My 1st collab with the great @LeeFootSEO!

Cluster your keywords & calculate:

✓ Traffic/volume per cluster

✓ CPC/KW difficulty

✓ Much more!

🎈 App → share.streamlit.io/charlywargnier…

📰 Post → charlywargnier.com/post/introduci…

⚡ Powered by Polyfuzz
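A rough sketch of what "cluster your keywords and calculate volume per cluster" can look like. It does not use PolyFuzz or reproduce the app: it swaps in the standard library's `difflib.SequenceMatcher` for string similarity, and the keywords, volumes, and 0.8 threshold are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical keywords with monthly search volumes.
KEYWORDS = {
    "bert model": 900,
    "bert models": 300,
    "keyword clustering": 500,
    "keyword clustering tool": 200,
}


def cluster_keywords(keywords, threshold=0.8):
    """Greedy clustering: attach each keyword to the first similar cluster head."""
    clusters = {}
    for keyword in keywords:
        for head in clusters:
            if SequenceMatcher(None, head, keyword).ratio() >= threshold:
                clusters[head].append(keyword)
                break
        else:
            # No existing cluster was similar enough, so start a new one.
            clusters[keyword] = [keyword]
    return clusters


clusters = cluster_keywords(KEYWORDS)
volume_per_cluster = {
    head: sum(KEYWORDS[k] for k in members) for head, members in clusters.items()
}
```

Greedy matching against cluster heads is order-dependent, so a dedicated fuzzy-matching library is the safer choice for real keyword lists.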

2. What The FAQ

Leverage the power of Google T5 & @huggingface Transformers to generate FAQs directly from URLs! 🤗

🎈 App → share.streamlit.io/charlywargnier…

📰 Post → charlywargnier.com/post/wtfaq-an-…

⚡Powered by NLTK & @Google T5 via @huggingface

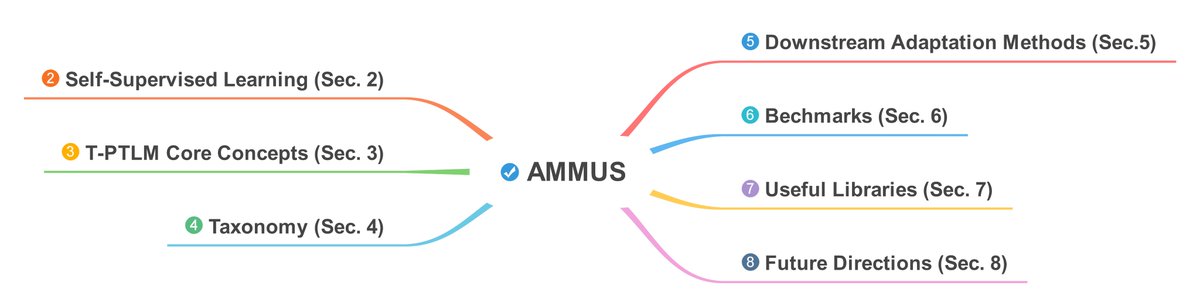

#1 AMMUS: A Survey of Transformer-based Pretrained Language Models #nlproc #nlp #bert #survey

Paper link: arxiv.org/abs/2108.05542

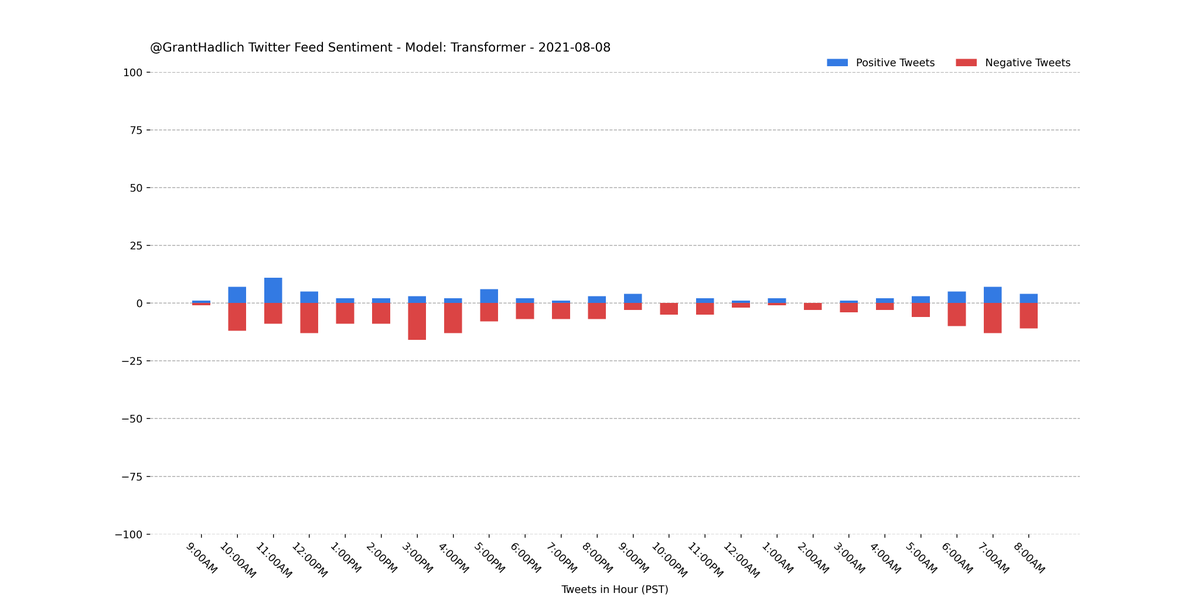

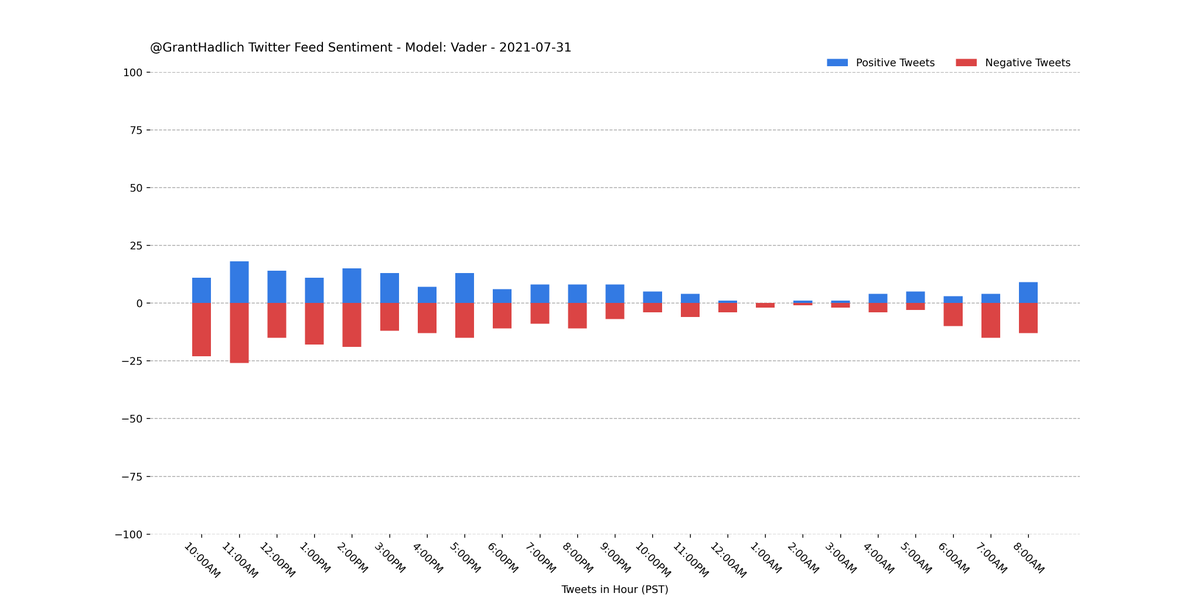

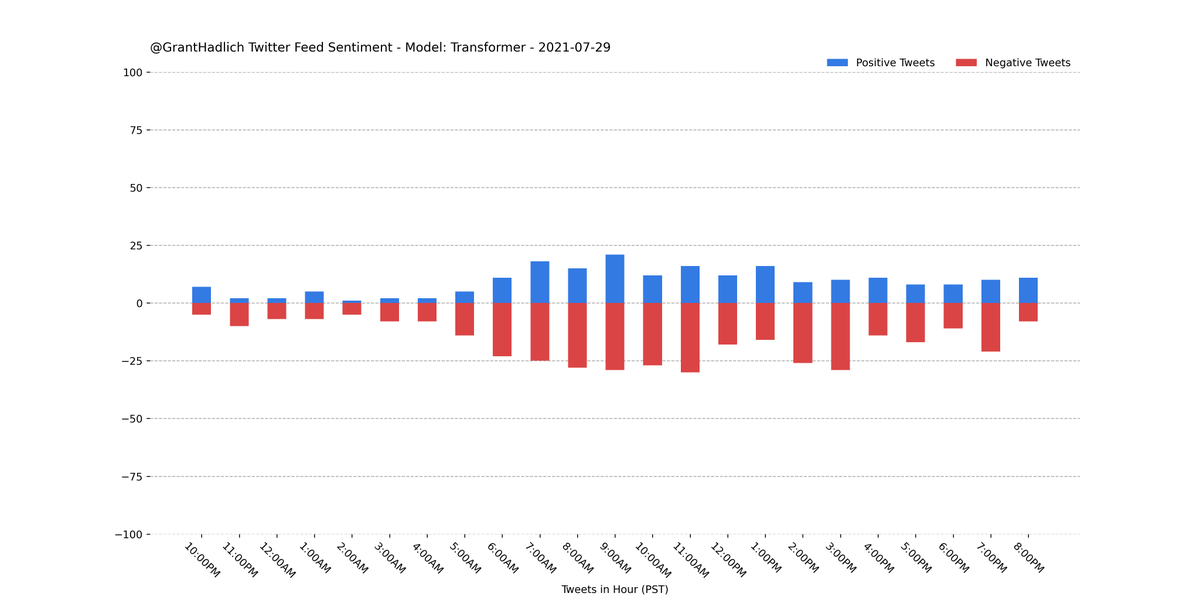

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 253 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (70.0%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 253 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (56.1%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot
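The "x% were classified as negative" summaries in these tweets boil down to labelling each tweet and counting. The sketch below shows only that counting step, under loud assumptions: the tiny word lists are a hypothetical stand-in for a real model such as VADER or a BERT classifier, and the example tweets are made up rather than taken from the author's feed or repository.

```python
# Hypothetical mini-lexicon standing in for a real sentiment model.
NEGATIVE_WORDS = {"bad", "awful", "hate", "broken"}
POSITIVE_WORDS = {"good", "great", "love", "fixed"}


def classify(tweet):
    """Label a tweet by counting lexicon hits; ties count as neutral."""
    words = tweet.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(
        w in NEGATIVE_WORDS for w in words
    )
    if score < 0:
        return "negative"
    return "positive" if score > 0 else "neutral"


def negative_share(tweets):
    """Percentage of tweets labelled negative, rounded to one decimal."""
    labels = [classify(t) for t in tweets]
    return round(100 * labels.count("negative") / len(labels), 1)


# Made-up example tweets.
tweets = [
    "I hate this broken build",
    "great release, love it",
    "awful commute today",
    "the meeting is at noon",
    "bad news again",
]
share = negative_share(tweets)
```

Swapping `classify` for a call to a trained model would leave `negative_share` unchanged, which is presumably why the BERT and VADER figures can be reported side by side.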

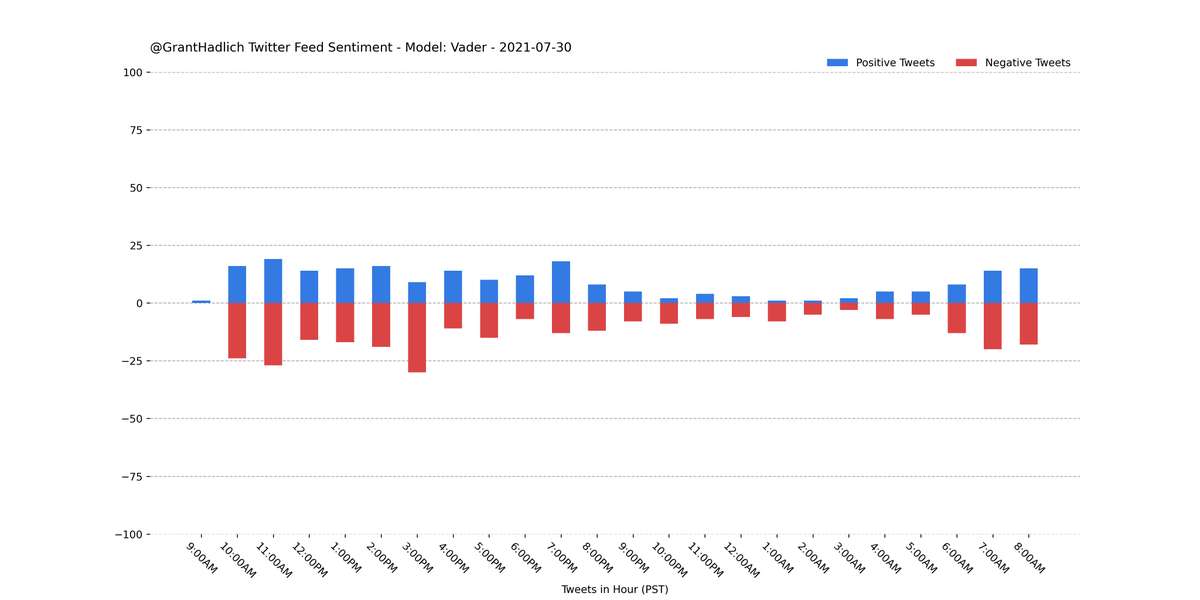

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 272 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (69.9%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 272 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (57.0%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

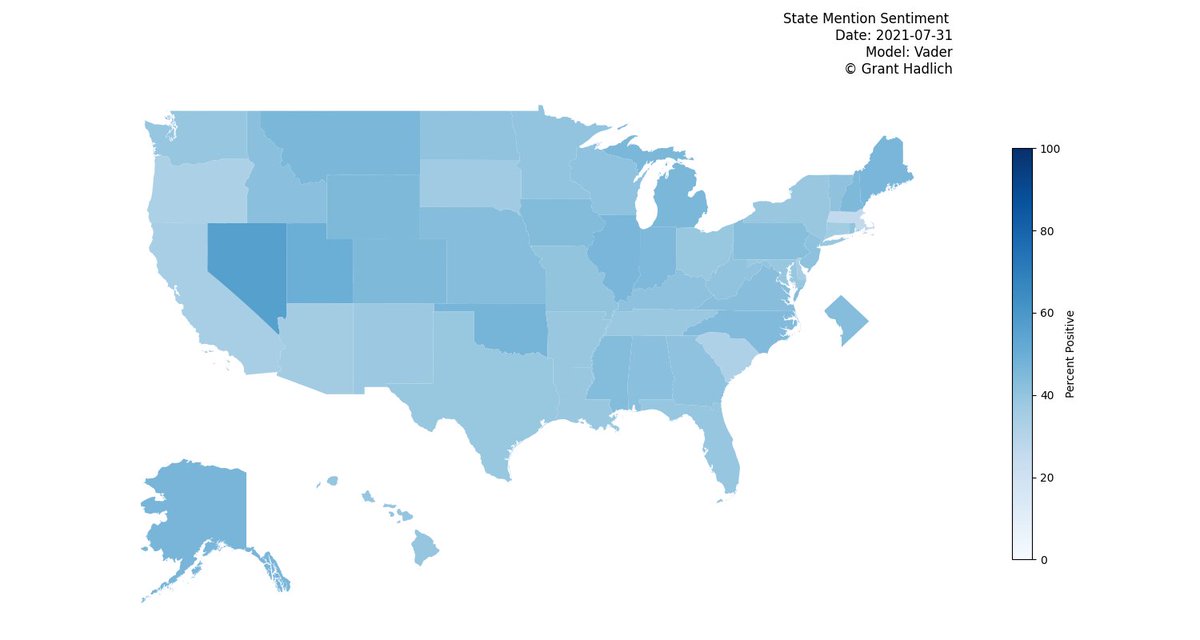

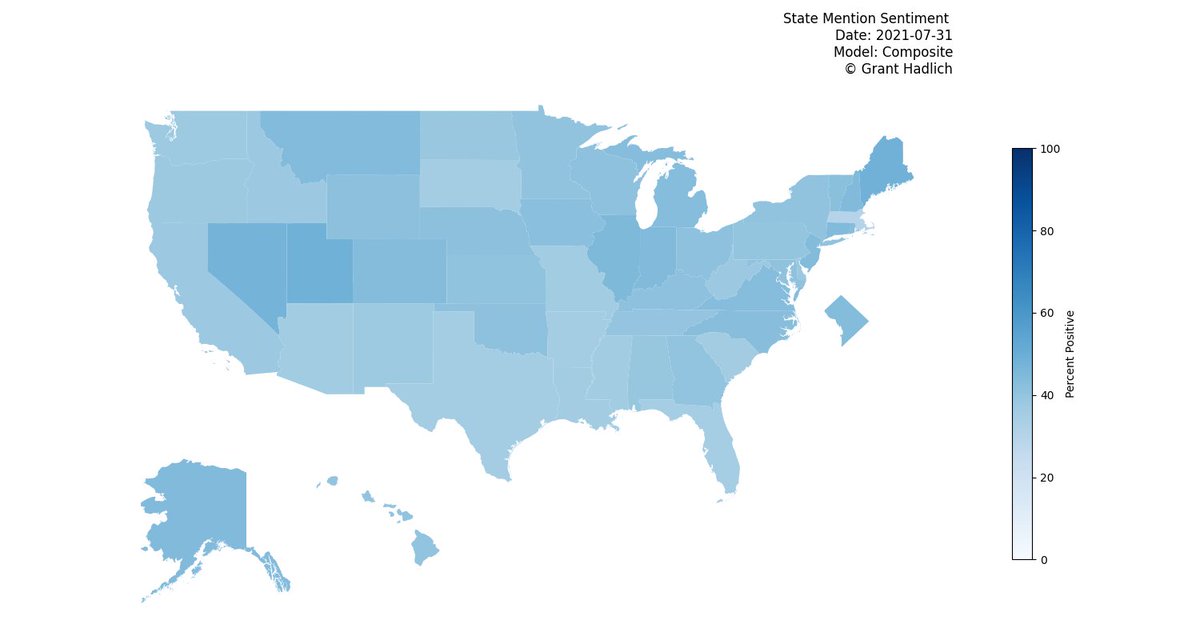

Each week I pull ~51000 tweets on US State mentions and do sentiment analysis. Most positive state was #Maine according to an ensemble model! In the replies are the individual models.

GitHub: github.com/ghadlich/State…

#NLP #Python #ML
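One simple way an "ensemble model" could pick the most positive state is to average each state's score across the individual models. The sketch below assumes exactly that; the thread does not say how its ensemble is built, and the model names, state labels, and scores here are hypothetical placeholders.

```python
from statistics import mean

# Hypothetical per-state sentiment scores in [-1, 1] from three hypothetical models.
MODEL_SCORES = {
    "model_a": {"State A": 0.42, "State B": 0.35, "State C": 0.10},
    "model_b": {"State A": 0.30, "State B": 0.38, "State C": 0.05},
    "model_c": {"State A": 0.45, "State B": 0.31, "State C": 0.12},
}


def ensemble_scores(model_scores):
    """Average each state's score over all models (unweighted)."""
    states = next(iter(model_scores.values())).keys()
    return {
        state: mean(scores[state] for scores in model_scores.values())
        for state in states
    }


combined = ensemble_scores(MODEL_SCORES)
most_positive = max(combined, key=combined.get)
```

Note that "State B" wins under `model_b` alone but loses once the three models are averaged, which is the kind of disagreement an ensemble smooths over.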

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 378 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (68.0%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 378 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (60.3%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 476 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (70.0%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 476 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (60.5%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 528 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (68.0%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 528 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (58.9%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 569 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (65.4%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 569 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (53.6%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 239 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (61.1%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 239 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (61.5%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 288 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (65.6%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 288 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (62.8%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

Each week I pull ~51000 tweets on US State mentions and do sentiment analysis. Most positive state was #Utah according to an ensemble model! In the replies are the individual models.

GitHub: github.com/ghadlich/State…

#NLP #Python #ML

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 412 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (67.5%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 412 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (59.0%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 499 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (63.3%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 499 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (58.9%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 517 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (62.9%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 517 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (58.0%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

How negative was my Twitter feed in the last few hours? In the replies are a few models that analyze the sentiment of my home timeline feed on Twitter for the last 24 hours using the Twitter API.

GitHub: github.com/ghadlich/Daily…

#NLP #Python

I analyzed the sentiment on the last 600 tweets from my home feed using a pretrained #BERT model from #huggingface. A majority (64.3%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot

I analyzed the sentiment on the last 600 tweets from my home feed using a pretrained #VADER model from #NLTK. A majority (56.7%) were classified as negative.

#Python #NLP #Classification #Sentiment #GrantBot
