Discover and read the best of Twitter Threads about #transformers

Most recent (24)

Stocks that hit a 52-week high today (1/n):

#3MIndia

#ActionConst

#AndhraCement

#ArmanFinancial

#ArrowGreentech

#Arvind

#ArvindSmart

#AshianaHousing

#AsianHotels

#AvalonTechnolo

#BajajAuto

#Stockmarket


Stocks that hit a 52-week high today (2/n):

#Beardsell

#BharatBijlee

#BharatDynamics

#BharatWireRop

#BhartiyaInter

#BirlaCable

#BirlaCorp

#CanFinHomes

#CCLProducts

#CentumElectron

#ChoiceInternat

#Stockmarket


Stocks that hit a 52-week high today (3/n):

#ControlPrint

#Craftsman

#CRISIL

#DalmiaBharat

#DatamaticsGlob

#DeltaCorp

#DevInformation

#DynamicService

#EmkayTaps

#eMudhra

#Stockmarket


It's claimed that 🇷🇺 GPS jamming is causing inconvenience for average Russians.

I find this interesting for two reasons…

The wide range of acceptance levels for inconveniencing average Russians & imposing the costs of war upon them.

The wide range of acceptance levels for THE ACTIONS that… twitter.com/i/web/status/1…


When I argued last summer for destroying the large pumps stealing water from Ukraine, to inconvenience the occupiers in Crimea and force Moscow to choose between supplying the frontlines/stealing 🇺🇦 resources and organizing logistics to supply from other sources, the predominant response… twitter.com/i/web/status/1…

🇷🇺GPS Jamming causing inconveniences in Moscow, or something else?

Frankly, I don’t care, as long as Russians are inconvenienced and bear the cost of their war against Ukraine.


🚀 1/ Excited to share our report (with Aydar Bulatov and @yurakuratov) on scaling Recurrent Memory Transformer to 2M (yes, two million)😮 tokens! 🧠🌐 #AI #NLP #DeepLearning

2/ 📈 We've tackled the quadratic complexity of attention in #Transformers by combining token-based memory & segment-level recurrence, using RMT.

🔸 RMT adapts to any Transformer family model

🔸 Memory tokens provide the recurrent connection 🎛️💡 #AI #NLP #DeepLearning


3/ 🧠 We tested RMT's memorization capabilities with synthetic datasets requiring fact memorization, detection, & reasoning. The model must separate facts from irrelevant text and use them to answer questions posed as a 6-class classification task. 🎯 #AI #NLP #DeepLearning
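The thread's description of RMT (memory tokens plus segment-level recurrence) can be illustrated with a minimal sketch. This is not the authors' code, and several pieces are assumptions: `toy_layer` is a hypothetical stand-in for a real Transformer block (it only mixes each position with the segment mean), memory tokens are prepended to each segment, and the outputs at the memory positions are carried forward as the next segment's memory.

```python
from typing import List

Vector = List[float]


def toy_layer(tokens: List[Vector]) -> List[Vector]:
    """Hypothetical stand-in for a Transformer block: each position is
    averaged with the mean of all positions in the segment, so information
    flows between the memory slots and the segment tokens."""
    dim = len(tokens[0])
    mean = [sum(t[i] for t in tokens) / len(tokens) for i in range(dim)]
    return [[0.5 * t[i] + 0.5 * mean[i] for i in range(dim)] for t in tokens]


def split_into_segments(seq: List[Vector], seg_len: int) -> List[List[Vector]]:
    return [seq[i:i + seg_len] for i in range(0, len(seq), seg_len)]


def rmt_forward(seq: List[Vector], seg_len: int, num_mem: int) -> List[Vector]:
    """Process a long sequence one segment at a time, carrying memory
    tokens from segment to segment (the recurrent connection)."""
    dim = len(seq[0])
    memory = [[0.0] * dim for _ in range(num_mem)]  # initial memory state
    for segment in split_into_segments(seq, seg_len):
        # The model only ever sees num_mem + seg_len positions at once.
        out = toy_layer(memory + segment)
        # Outputs at the memory positions become the next segment's memory.
        memory = out[:num_mem]
    return memory


seq = [[float(i), 1.0] for i in range(6)]
print(rmt_forward(seq, seg_len=2, num_mem=1))
```

Because each model call covers a fixed window of `num_mem + seg_len` positions, the per-segment attention cost does not grow with the total input length; earlier segments influence later ones only through the memory vectors.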

The French government (PEReN service) has published an educational document describing how conversational AIs such as ChatGPT #GPT4 work. Here is a quick summary. 1/9

🧠 Large Language Models (LLMs) are impressive neural networks that are revolutionizing Natural Language Processing (NLP). They are statistical models that imitate the way the human brain works in order to process and understand language. #NLP 2/9

🏗️ Neural networks are organized in layers, with each layer detecting recurring patterns in the data. The neurons contain parameters that are adjusted during training to improve performance. #MachineLearning 3/9
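To make "parameters adjusted during training" concrete, here is a minimal sketch assuming the simplest possible case: a single neuron with no activation function, fitted by plain gradient descent on mean squared error. The toy data (`y = 2x + 1`), learning rate and epoch count are illustrative choices; real LLMs apply the same idea to billions of parameters through backpropagation across many layers.

```python
from typing import List, Tuple


def train_neuron(
    data: List[Tuple[float, float]], lr: float = 0.05, epochs: int = 2000
) -> Tuple[float, float]:
    """Fit y ≈ w*x + b for one linear neuron by gradient descent on MSE."""
    w, b = 0.0, 0.0  # the neuron's parameters, initially untrained
    n = len(data)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) w.r.t. w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Nudge each parameter against its gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0]]
w, b = train_neuron(data)
print(round(w, 3), round(b, 3))
```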

1/10 Working with LLMs is exciting & powerful, but there are a few things I wish I had known when getting started with prompt engineering.

Here are 8 tips for getting the most out of @Cohere's Generate, #ChatGPT, #GPT3, and other text generation models🧵👇🏻

🎬


2/10

I am using @CohereAI's Generate to illustrate my points.

1. Start small!

Don’t try to do too much at once. Give the model simple, straightforward tasks and gradually build up to more complex ones.

A good simple instruction can start with: "Write a ....".


3/10

2. Experiment and iterate.

Make it a rule to experiment with different versions of your prompt to see what works best for your model. Even small tweaks can do wonders for the quality of your output. 🧪👩🔬
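Tips 1 and 2 (start with a simple "Write a ..." instruction, then try small variations) can be made systematic with a tiny harness. This is a sketch under loud assumptions: `fake_generate` is a hypothetical placeholder that only echoes the prompt (it is NOT the Cohere or OpenAI API), and `short_is_better` is a toy metric. Swap in your real model client and a score that fits your task.

```python
from typing import Callable, Dict, List, Tuple


def run_prompt_experiment(
    variants: Dict[str, str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
) -> List[Tuple[str, float, str]]:
    """Send each prompt variant to a generation function and rank the
    variants by a user-defined score (higher is better)."""
    results = []
    for name, prompt in variants.items():
        output = generate(prompt)
        results.append((name, score(output), output))
    return sorted(results, key=lambda r: r[1], reverse=True)


def fake_generate(prompt: str) -> str:
    # Hypothetical stand-in for a real model call, so the example runs offline.
    return f"[model output for: {prompt}]"


def short_is_better(output: str) -> float:
    # Toy metric: prefer shorter outputs.
    return -len(output)


variants = {
    "v1": "Write a tagline for a coffee shop.",
    "v2": "Write a short, playful tagline for a coffee shop.",
    "v3": "Write a tagline for a coffee shop that mentions its late opening hours.",
}
ranking = run_prompt_experiment(variants, fake_generate, short_is_better)
for name, s, _ in ranking:
    print(name, s)
```

Keeping the variants in one dictionary makes it easy to change a single word at a time and see which tweak actually moves the score.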


Today's 🧵 is about the AI CONSTITUTION!! This won't be science fiction, just a description of what is happening deep inside Google and OpenAI. It's worth going back to my previous 🧵 on how a neural network learns.

#AI #Openai #ChatGPT #AnthropicAI #transformers


1/ OpenAI's public release of ChatGPT caused quite a stir. Although in our tech circles it had been known for at least 2-3 years that models with such capabilities existed, companies like Google were afraid to give open access to them.

2/ There are at least several companies that have models similar to ChatGPT, possibly even better ones. One of them is Google, about which @T_Smolarek wrote a very interesting 🧵:

The GPT of Robotics? RT-1

RT-1 is a two-year effort to bring the power of open-ended, task-agnostic training with a high-capacity architecture to the robotics world.

The magic sauce? A big and diverse robotic dataset + an efficient Transformer-based architecture

🧵


Time for the Day 1 recap of #CCXP22! Pedro Pascal, Indiana Jones, Kevin Feige, Avatar and much more. Follow the thread to see the main highlights from the event's opening day! #PHnaCCXP

Fernando Meirelles celebrates 20 years of #CidadedeDeus and reveals that a spin-off series of the film is in development. #PHnaCCXP

Paramount Pictures released the trailer for #Transformers: O Despertar das Feras (Transformers: Rise of the Beasts). There will be three new films in the franchise. #PHnaCCXP

@nixtlainc started last year as a side project

Today we reached 1 million downloads🎉

Our goal is to shake the time series industry and make state-of-the-art algorithms available for everyone 🔥

This is how we got there

🧵1/11

#reinventforecasting


@nixtlainc's ecosystem now consists of five #python libraries

Focus: speed, scalability, and accuracy 🚀

Some features:

* @scikit_learn syntax

* Native support for #spark, @raydistributed, and @dask_dev

* Models! E.g. #arima, #LightGBM, #NeuralNetworks, #transformers 🤖

2/11
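"Scikit-learn syntax" generally means an estimator is configured in its constructor, trained with `fit`, and used with `predict`. The sketch below shows that pattern for forecasting. `SeasonalNaiveForecaster` is a hypothetical class written for illustration, not one of Nixtla's actual classes, and taking a horizon `h` in `predict` (instead of scikit-learn's feature matrix `X`) is an assumption that suits time series.

```python
from typing import List


class SeasonalNaiveForecaster:
    """Hypothetical forecaster with a scikit-learn-style fit/predict API.
    Seasonal naive: each future value repeats the value observed one
    season earlier."""

    def __init__(self, season_length: int = 7):
        self.season_length = season_length

    def fit(self, y: List[float]) -> "SeasonalNaiveForecaster":
        if len(y) < self.season_length:
            raise ValueError("need at least one full season of history")
        # Learned state gets a trailing underscore, as in scikit-learn.
        self.last_season_ = list(y[-self.season_length:])
        return self  # returning self allows chaining: .fit(...).predict(...)

    def predict(self, h: int) -> List[float]:
        # Repeat the last observed season cyclically for h steps ahead.
        return [self.last_season_[i % self.season_length] for i in range(h)]


history = [10, 12, 14, 11, 10, 12, 14, 11]
forecast = SeasonalNaiveForecaster(season_length=4).fit(history).predict(h=6)
print(forecast)
```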


We are really proud of the open-source adoption

Repos from Amazon, Mozilla, and Databricks use us as dependencies 🏄♂️

We have contributions from people working at H2O, Microsoft, Google, Facebook, Salesforce, Oracle, Shopify, AT&T, Blue Yonder, Stanford, MIT and UCL 🙏

3/11


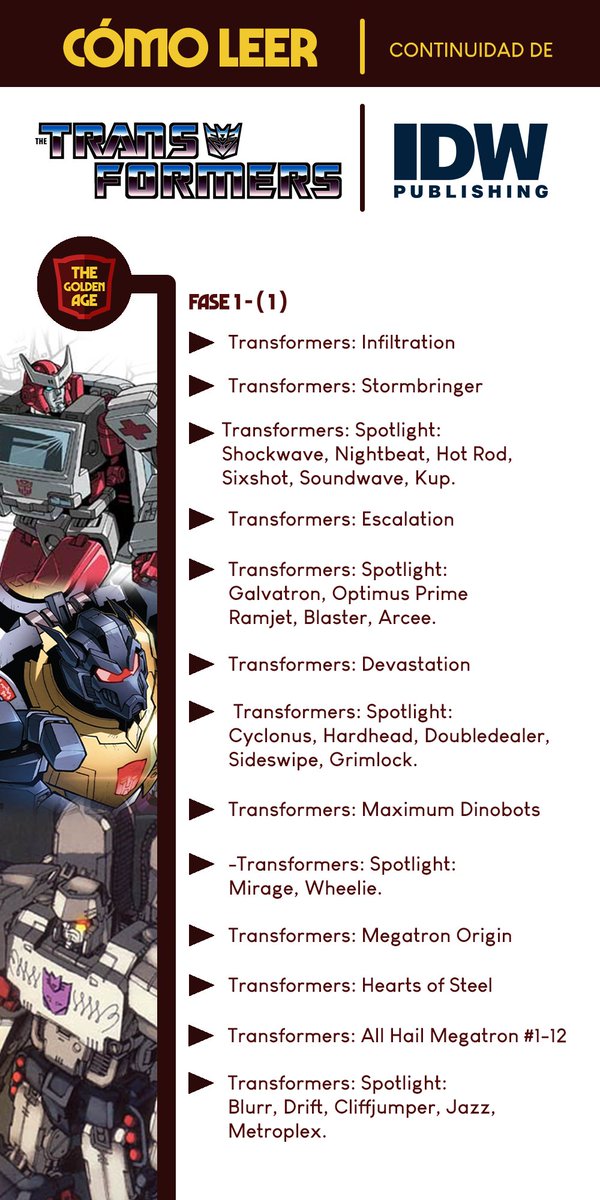

- IDW reading order - 1/2

This is the ideal one, made by the TFWiki, and it's the most comfortable one there is. Don't use the chronological order floating around, because it makes you start by reading garbage (which gets retconned later).

Link de los comics: mega.nz/folder/o1Z2BIJ…

#Goldenposting #Transformers


- NOTICE -

We made a small mistake in the second image: Stormbringer appears twice. Please ignore that, and sorry.

- @SrUltimate2000


NLP Roadmap 2022 with free resources.

This is what you need to build real-world NLP projects on a solid foundation. A Thread 🧵👇


🎯 Text Pre-Processing (Use #spacy):

👉 Tokenization

👉 Lemmatization

👉 Removing punctuation, stopwords, etc.
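The three pre-processing steps above can be sketched in plain Python to show what each one does. This is a toy stand-in, not spaCy: the stopword list is a tiny illustrative sample and `toy_lemmatize` only handles a couple of plural endings, whereas real lemmatizers use vocabularies and part-of-speech tags.

```python
import re
from typing import List

# Tiny illustrative stopword list; spaCy ships a far larger one.
STOPWORDS = {"a", "an", "and", "are", "is", "of", "the", "to", "was", "were"}


def tokenize(text: str) -> List[str]:
    """Lowercase, then split into word tokens and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def toy_lemmatize(token: str) -> str:
    """Toy rule-based lemmatizer that only handles a few plural endings."""
    if token.endswith("ies") and len(token) > 4:
        return token[:-3] + "y"  # butterflies -> butterfly
    if token.endswith("s") and not token.endswith("ss") and len(token) > 3:
        return token[:-1]  # cats -> cat
    return token


def preprocess(text: str) -> List[str]:
    return [
        toy_lemmatize(tok)
        for tok in tokenize(text)
        # Drop punctuation (non-word tokens) and stopwords.
        if re.fullmatch(r"\w+", tok) and tok not in STOPWORDS
    ]


print(preprocess("The cats chased the butterflies!"))
```

With spaCy itself (after `python -m spacy download en_core_web_sm`), the equivalent is roughly `[t.lemma_ for t in spacy.load("en_core_web_sm")(text) if not t.is_stop and not t.is_punct]`, which also lemmatizes verbs such as "chased".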


1/ Hearing about #BERT and wondering why #radiologists are starting to talk more about this Sesame Street character?

Check out this #tweetorial from TEB member @AliTejaniMD with recommendations to learn more about #NLP for radiology.


2/ #BERT is a language representation model taking the #NLP community by storm, but it's not new! #BERT has been around since 2018 via @GoogleAI. You may interact with #BERT every day (chatbots, search tools).

But #BERT is relatively new to healthcare. What makes it different?


3/ For starters, #BERT is "bidirectional": it doesn't read the input text in a single direction (left-to-right or right-to-left). Instead, its #Transformer-based architecture provides an #attention mechanism that helps the model learn context from ALL surrounding words.
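One way to see what "bidirectional" means is to compare attention masks. In the sketch below, each token's raw scores are turned into attention weights with a softmax; with `causal=True` a token may only look at itself and earlier tokens (left-to-right, as in GPT-style models), while with `causal=False` it looks at every token (as in BERT). The uniform scores are made-up values chosen so the masking pattern is easy to read.

```python
import math
from typing import List


def attention_weights(scores: List[List[float]], causal: bool) -> List[List[float]]:
    """Row-wise softmax over query-key scores.
    causal=True: position i attends only to positions j <= i.
    causal=False: every position attends to all positions (bidirectional)."""
    n = len(scores)
    weights = []
    for i in range(n):
        allowed = [j for j in range(n) if not causal or j <= i]
        m = max(scores[i][j] for j in allowed)  # subtract max for numerical stability
        exps = {j: math.exp(scores[i][j] - m) for j in allowed}
        total = sum(exps.values())
        # Masked (future) positions get weight 0.
        weights.append([exps.get(j, 0.0) / total for j in range(n)])
    return weights


scores = [[0.0] * 3 for _ in range(3)]  # 3-token sentence, uniform scores
bidirectional = attention_weights(scores, causal=False)
left_to_right = attention_weights(scores, causal=True)
print(bidirectional)
print(left_to_right)
```

In the bidirectional case the first token already draws on the words after it, which is exactly the "context from ALL surrounding words" the thread describes.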

Okay, so I may be insane to do this... but here are all my Micromaster designs so far, in a single thread. Enjoy. (Comments and thoughts and crits super welcome!) #transformers #interplanetarywrestling (Part 1 of 34)

Tracing the origin of the term "Combiners". It starts with the G1 tech specs, where "Combines with x to form x" was a standard phrase, although, as you can see from Hot Spot's bio, it wasn't universal. There's no universal term for them in G1 at all #transformers (1/10)

There are a few official terms for certain kinds of combiners in Generation 1. "Special Teams" appears to have been the intended term for the scramble-style combiners in the US, and was used extensively in the UK. #transformers (2/10)

There's a gap here in my knowledge -- I haven't gone back through Marvel comics and checked for the term combine (which does appear) or combiner (which I don't think appears). Here's some examples of how combiners are referred to, but it's not definitive. #transformers (3/10)

1/ "Software is eating the world. Machine learning is eating software. Transformers are eating machine learning."

Let's understand what these Transformers are all about

#DataScience #MachineLearning #DeepLearning #100DaysOfMLCode #Python #pythoncode #AI #DataAnalytics


2/ The #Transformers architecture follows an encoder-decoder structure.

The encoder receives the input sequence and creates an intermediate representation by applying embeddings and the attention mechanism.

#DataScience #MachineLearning #DeepLearning #100DaysOfMLCode #Python #pythoncode #AI


3/ This intermediate representation, or hidden state, is then passed to the decoder, which starts generating an output sequence.

#DataScience #MachineLearning #DeepLearning #100DaysOfMLCode #Python #pythoncode #AI #DataScientist #DataAnalytics #Statistics
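The encoder-to-decoder data flow from tweets 2/ and 3/ can be traced in a toy sketch. Many simplifications are assumptions made for brevity: random (untrained) embeddings, a single attention layer on each side, no learned Q/K/V projections, no feed-forward layers or residuals, output scores computed against the input embeddings (tied embeddings), and a fixed output length instead of an end token. The generated ids are therefore meaningless; the point is where the hidden states go.

```python
import math
import random
from typing import List

Vector = List[float]

DIM, VOCAB = 4, 6
BOS = 0  # hypothetical "beginning of sequence" token id
_rng = random.Random(0)
# Hypothetical random embedding table; a trained model would learn these.
EMBED: List[Vector] = [[_rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(VOCAB)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(queries: List[Vector], keys_values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention using the same vectors as keys and values."""
    out = []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(DIM) for k in keys_values]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        total = sum(w)
        out.append([sum(wi * v[d] for wi, v in zip(w, keys_values)) / total
                    for d in range(DIM)])
    return out


def encode(src_ids: List[int]) -> List[Vector]:
    """Encoder: embed the input tokens, then apply self-attention to get the
    intermediate representation (one hidden state per input token)."""
    x = [EMBED[i] for i in src_ids]
    return attend(x, x)


def decode(hidden: List[Vector], max_len: int) -> List[int]:
    """Decoder: generate one token at a time, greedily."""
    out_ids = [BOS]
    for _ in range(max_len):
        y = [EMBED[i] for i in out_ids]
        # Self-attention for the newest position over the tokens generated so far.
        h = attend([y[-1]], y)
        # Cross-attention: the decoder queries the encoder's hidden states.
        h = attend(h, hidden)[0]
        # Score every vocabulary entry against h and pick the best one.
        logits = [dot(h, e) for e in EMBED]
        out_ids.append(max(range(VOCAB), key=lambda v: logits[v]))
    return out_ids[1:]  # drop the BOS token


hidden = encode([1, 2, 3, 4])
generated = decode(hidden, max_len=5)
print(generated)
```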


🔥 My weekly #newsletter: "Tech & Innovation Radar - Issue #121" - #AI #ArtificialIntelligence #MachineLearning #Blockchain #Space #NASA #CyberSecurity #QuantumComputing #Tech #Innovation #hacking #DataLeak

bit.ly/38DdiPX


- A molecular solar-energy storage system that can release the stored energy as electricity ... great potential

Over the past several months, I’ve been doing a deep-dive into transformer language models #NLProc and their applications in #psychology for the last part of my #PhD thesis. 👩🏻🎓 Here are a few resources that I’ve found invaluable and really launched me forward on this project 🧵:

🏃🏻♀️💨 If you already have some data and you want a jump start to classify it all using #transformers, check out @maria_antoniak’s #BERT for Humanists/Computational Social Scientists Talk: Colab notebook and other info: bertforhumanists.org/tutorials/#cla…

🤓 I’m an absolute nerd for stats and experiment design in psych, but doing #ML experiments is very different. A clear, detailed (and reasonable length) course to get up to speed on train-test splits, hyperparameter tuning, etc. and do the very best work: coursera.org/learn/deep-neu…
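As a small illustration of the train-test splitting the course covers, here is a minimal sketch of a reproducible three-way split in plain Python. The 80/10/10 fractions and the seed are illustrative assumptions. The usual discipline is to tune hyperparameters on the validation set and evaluate on the test set only once, at the end. scikit-learn's `train_test_split` (with `test_size` and `random_state`) can do the same when called twice.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_test_split(
    items: Sequence[T], val_frac: float = 0.1, test_frac: float = 0.1, seed: int = 42
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into three disjoint parts."""
    if val_frac + test_frac >= 1:
        raise ValueError("val_frac + test_frac must be < 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # same seed -> same split every run
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(list(range(100)))
print(len(train), len(val), len(test))
```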

Proud proud proud. In a 9-part thread 👇🏼… it was only three years ago that #CrazyRichAsians was released… This weekend @henrygolding stars in his first big superhero movie, #SnakeEyes, as one of the most iconic heroes ever created and a ninja very close to my heart (go see it!)

Later this year @GemmaChan is the star in @MarvelStudios #Eternals in what looks to be the start of a whole new chapter in the Marvel Cinematic Universe… (2/9)

Later this summer we will be seeing @awkwafina star alongside #MichelleYeoh and @ronnychieng in @MarvelStudios #ShangChi (3/9)

"Attention Is All You Need" is one of the most cited papers of the last couple of years. What is attention? Let's try to understand it in this thread.

Paper link: arxiv.org/abs/1706.03762

#DeepLearning #MachineLearning #Transformers


In the self-attention mechanism, we update the features of each point with respect to the features of all the other points. The attention proposed in this paper is also known as scaled dot-product attention.

Let's say our data point is a single sentence. We embed each word into a d-dimensional space, compute how similar each word is to every other word, and weight its representation accordingly. The similarity matrix is just a scaled dot product!
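The paper defines this as Attention(Q, K, V) = softmax(QKᵀ / √d_k) V. Below is a minimal plain-Python sketch of that formula. One simplification is an assumption: the example uses the raw word embeddings directly as Q, K and V, whereas the paper first applies learned linear projections to get queries, keys and values. The three 2-dimensional "word" vectors are made-up numbers.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        mx = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(x - mx) for x in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V, one output row per query word."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # similarity of every word with every other word
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = softmax_rows(scaled)  # each row sums to 1
    return matmul(weights, v)  # weighted mix of the value vectors


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three "words", d = 2
out = scaled_dot_product_attention(x, x, x)  # self-attention: Q = K = V = x
print(out)
```

The division by √d_k keeps the dot products from growing with the embedding size, which would otherwise push the softmax towards near one-hot weights.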

Today, I would like to share the thought process and inspiration behind building South Gujarat's first #Coworking space, @ikoverk.

Coworking spaces are plug-and-play offices for #Startups, #Freelances, #Consultants and #SMEs, but we wanted to build something more: a community (1/n)


As you enter, you will find a large front desk with volunteers to guide you and onboard you for a 7-day free trial, amid the aroma of coffee and the chatter of fellow coworkers discussing new ideas.

#Connect #Collaborate #Celebrate is the motto.


At the front desk, you will find an intuitive map of @ikoverk along with a sign that reads: "Remember, when you enter the door to the right, you will enter the War Room," because everyone here is a hustler, an innovator and a true warrior.

I'm thrilled to present the summer course that @andortizg and I are organizing for @UNIAuniversidad:

🤖💻🧠

A Practical Introduction to Artificial Intelligence and Deep Learning.

🤖💻🧠

unia.es/inteligencia.a…

#IA #DL #ST

Now for the sales pitch :)


Artificial Intelligence… Neural Networks…

THE MACHINES ARE TAKING OUR JOBS!!

Two fields permeate the sciences in the 21st century: statistics (#estadística) and computing (#computación). Combined, they give rise to Machine Learning: how to make machines "learn".


As I say in my monologues, machines don't so much take our jobs as take "work" off our hands.

Machine Learning is a fundamental tool for automating processes, and nowadays it can't be understood without neural networks (the foundation of Deep Learning, #DL).


Here's a portfolio thread for #Transformers fans. It's a peek at some of my contributions to the Fun Pub era of BotCon. I'd mock up my own ideas and collab with @PlayWithThisToo & others there. Some got made, some didn't. Let's start with the stuff that got made. Long thread incoming...

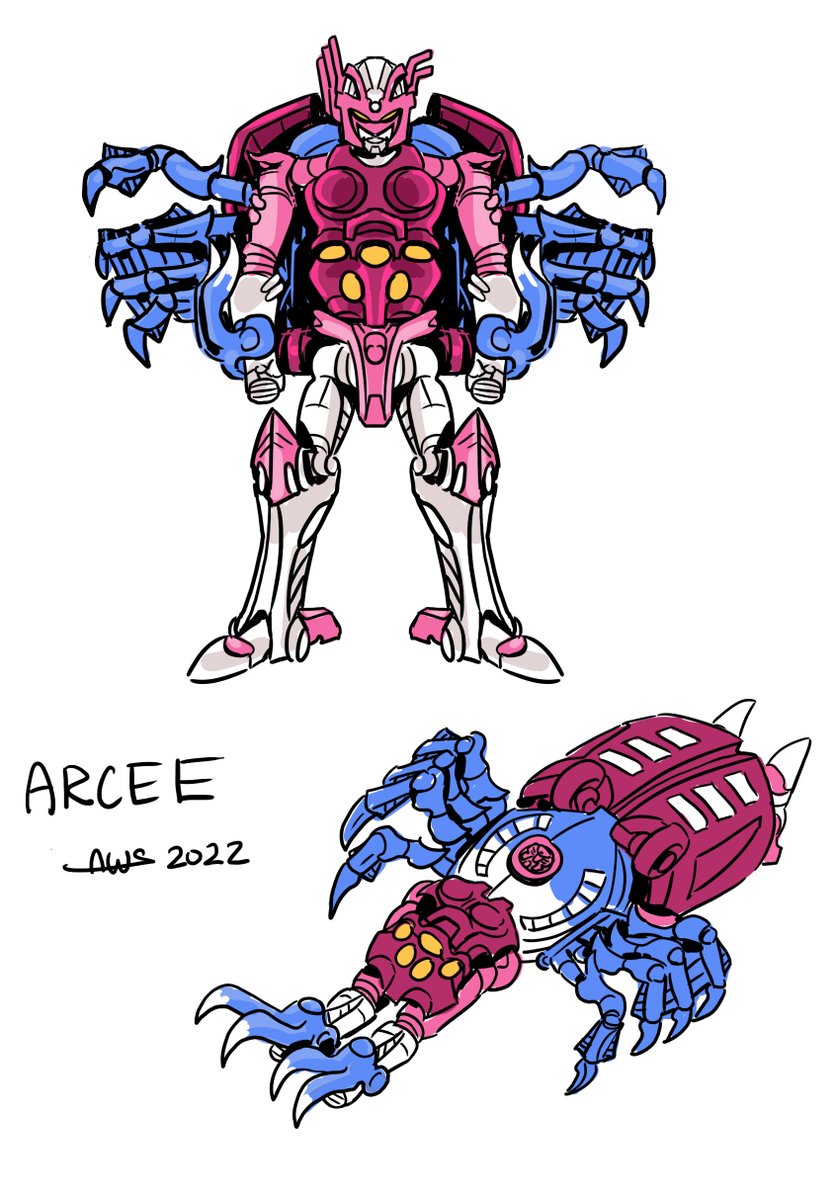

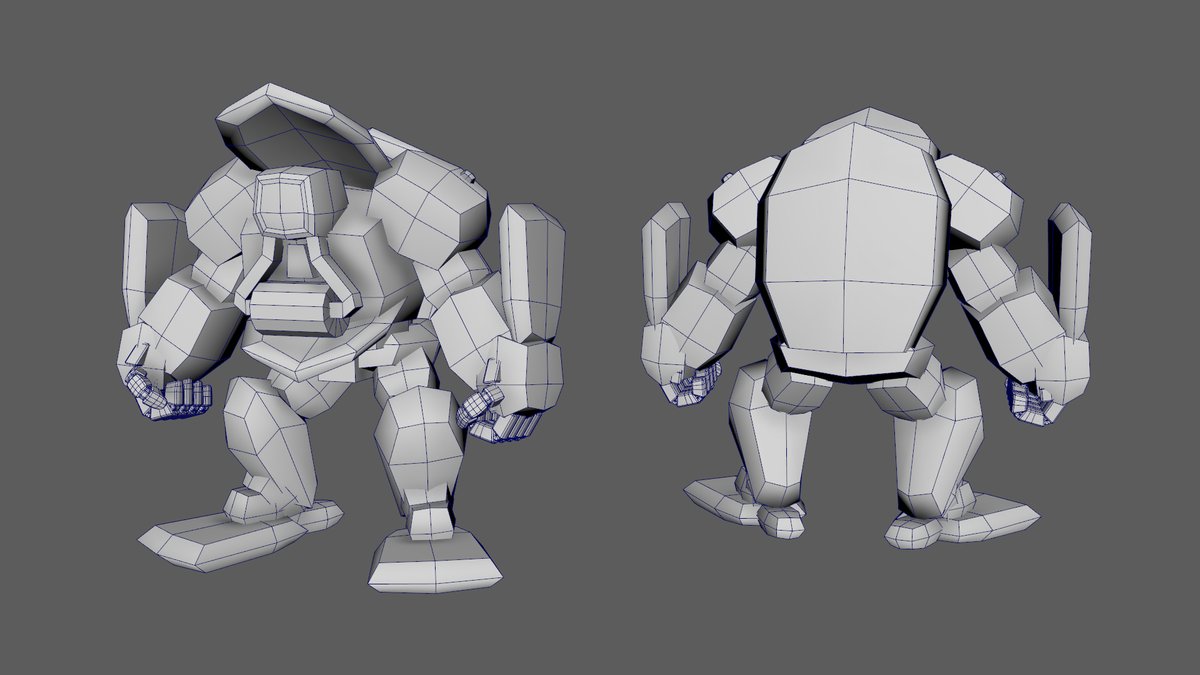

Beast Wars Movie Diver Blocking W.I.P. #Transformers #fanart #トランスフォーマー #3D #3dmodeling #3Dモデル #イラスト

@baltmatrix @Peaugh Hope you like it!

I would love to do some designs for the official Beast Wars movie...